
The Cyberspace Administration of China (CAC) has proposed a new set of draft regulations to govern AI-aided synthesis systems, including deepfakes, virtual reality scenes, text generation, audio, and other sub-sectors of AI media synthesis, a field in which China produces a prodigious number of academic papers and innovative research projects every month.

A post (Google translation, original here) on the official website of the CAC sets out the proposed obligations and characterizes affected services as ‘deep synthesis service providers’. It invites citizens to participate by contributing comments on the draft proposals, with a deadline of 28th February.

Not Just Deepfakes

Though the suggested regulations have been reported in terms of their potential impact on the creation and dissemination of deepfakes, the document attempts an all-encompassing purview over the ability of algorithms to generate any type of content that could be interpreted in the broadly understood sense of ‘media’.

Article 2 declares the projected scope of the regulations across six sectors*:

(1) Techniques for generating or editing text content, such as chapter generation, text style conversion, and question-and-answer dialogue;

(2) Technologies for generating or editing voice content, such as text-to-speech, voice conversion, and voice attribute editing;

(3) Technologies for generating or editing non-voice content, such as music generation and scene sound editing;

(4) Face generation, face replacement, character attribute editing, face manipulation, gesture manipulation, and other technologies for generating or editing biometric features such as faces in images and video content;

(5) Techniques for editing non-biological features in images and video content, such as image enhancement and image restoration;

(6) Technologies for generating or editing virtual scenes, such as 3D reconstruction.

Deepfake Regulation

China criminalized the use of AI for spreading fake news at the end of 2019, at which time the CAC expressed concern about the potential implications of deepfake technology, prompting many to think that the Chinese government would eventually ban deepfake technology outright.

However, this would entail China formally abandoning one of the most politically and culturally significant developments in the history of media generation, AI, and even politics, and cutting itself off from the benefits of global and open scientific collaboration.

Therefore, it seems that China is now determined to experiment with controlling rather than banning the potentially rogue technology, which, many believe, will eventually migrate out of its porn-accelerated phase and into a legitimate and exploitable set of use cases, notably in entertainment.

NeRF Included

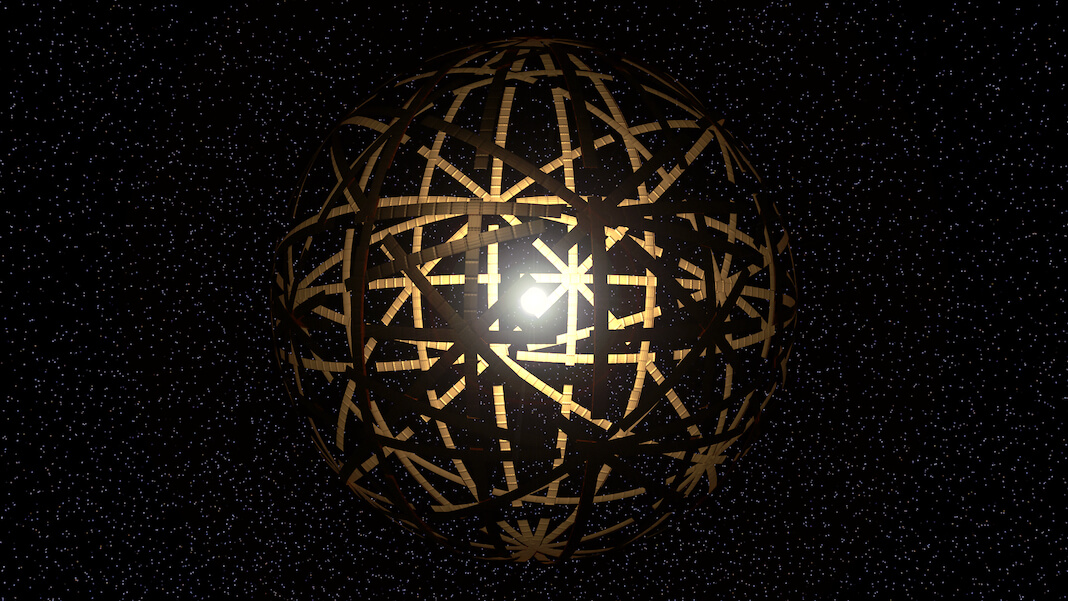

Article 2.6 addresses the generation or editing of virtual scenes, such as 3D reconstruction, a more nascent technology than deepfake impersonation, and one that has achieved the most prominence over the past two years through the advent of Neural Radiance Fields (NeRF), where photogrammetry is used to synthesize entire scenes in the explorable latent space of machine learning models.

However, NeRF is rapidly expanding its reach from tableaux of models and walk-throughs of environments into the generation of full-body video, with Chinese researchers having advanced some major innovations in this respect.

China’s ST-NeRF in action.

Though NeRF has produced a blizzard of new research since its announcement in 2020, its implementation in VR or AR systems, and its suitability for visual effects pipelines, still face many notable challenges and technological bottlenecks. NeRF’s growing ability to reconstitute and edit full human physiognomies has yet to incorporate any of the standard identity-transforming deepfake capabilities that have characterized news headlines over the last two years.

Audio Deepfakes a Priority?

If one is to take Article 2’s list order as an indication of the deep synthesis technologies that China is most concerned to control and regulate, this would suggest that text-based AI-generated fake news is of primary concern, with voice synthesis ahead of video deepfakes in terms of its potential impact.

If so, this accords with the fact that deepfake video has yet to be used in any crime not related to pornography (Asia has not hesitated to criminalize deepfake porn), whereas deepfake audio has been posited as an active technology in at least two major financial crimes, in the UK in 2019 and in the United Arab Emirates in 2021.

The new draft regulations oblige users wishing to exploit a person’s identity via the use of machine learning systems to seek written permission from the individual. Additionally, synthesized media must display some kind of ‘prominent’ logo or watermark, or other means by which the person consuming the material can be made to understand that the content is altered or entirely fabricated. It is not entirely clear how this can be achieved in the case of audio deepfakes.

Registering

If ratified, the draft proposals would obligate deep synthesis service providers to register their pertinent applications with the state, in accordance with the existing Provisions on the Administration of Algorithm Recommendations for Internet Information Services, and to comply with all necessary filing procedures. Deep synthesis providers will also be required to cooperate freely in terms of supervision and inspection, and to provide ‘necessary technical and data support and assistance’ on request.

Further, such providers will need to establish user-friendly portals for complaints, and to publish expected time limits on the processing of such complaints. They will also be required to provide ‘rumor-refuting mechanisms’, though the proposals do not detail how these should be implemented.

Infractions may prompt fines of between 10,000 and 100,000 yuan ($1,600 and $16,000), as well as expose offending companies to civil and criminal lawsuits.

* Translation by Google Translate; see link early in article.

First published 28th January 2022.
