Internal links on a website are a crucial organic ranking factor for Google. Links help Google discover pages and assign rankings based on quantity and location. A page with 100 internal links is likely to have a higher priority than one with a single link.

But neither purpose (discovery or rankings) is possible if Googlebot cannot crawl the links. Crawling can fail in three ways:

- Links behind JavaScript. Google can usually crawl and render links in JavaScript, e.g., in tabs and collapsible sections. But not always, especially when the JavaScript must be executed before the links appear.

- Links on the desktop version but not on mobile. By default, Google indexes the mobile version of a website. However, mobile sites are often scaled-down desktop versions with far fewer links, preventing Google from discovering and indexing the excluded pages.

- Links with a nofollow attribute or meta tag. Google states that it may follow links with nofollow attributes, but there’s no way of knowing whether it did. And the meta tag blocks crawling only when Googlebot honors it. In addition, many website owners are unaware of active nofollow attributes or meta tags, especially if they use a plugin such as Yoast that adds them with a single click.
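The second scenario can be checked by comparing the links in the desktop and mobile HTML of the same page. The following is a minimal sketch using only Python's standard library; the function names and sample markup are hypothetical, and it reads static HTML only, so it will not see links that JavaScript adds later.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect href values from <a> tags in static HTML (no JavaScript is run)."""

    def __init__(self):
        super().__init__()
        self.hrefs = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.add(href)


def extract_links(html):
    """Return the set of link targets found in an HTML string."""
    parser = LinkCollector()
    parser.feed(html)
    return parser.hrefs


def links_missing_on_mobile(desktop_html, mobile_html):
    """Return links present in the desktop HTML but absent from the mobile HTML."""
    return sorted(extract_links(desktop_html) - extract_links(mobile_html))


# Hypothetical sample markup, for illustration only.
desktop = '<nav><a href="/shoes">Shoes</a><a href="/hats">Hats</a><a href="/sale">Sale</a></nav>'
mobile = '<nav><a href="/shoes">Shoes</a></nav>'

print(links_missing_on_mobile(desktop, mobile))  # ['/hats', '/sale']
```

Any URL in the result is a page that Google may fail to discover through the mobile version.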

Even if a page is indexed, you can never be sure that the links to or from that page can be crawled and thus pass link equity.

Here are three ways to ensure Googlebot can crawl links on your site.

Tools for checking links

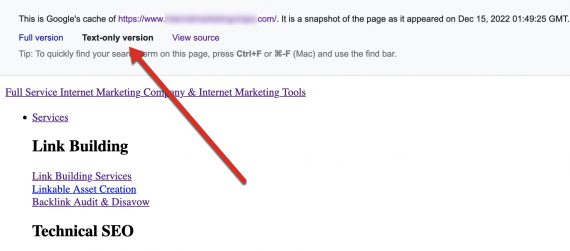

Google’s text cache. The plain text version of Google Cache represents how Google sees a page with CSS and JavaScript disabled. It is not how Google indexes a page, since Google can now understand pages as humans see them.

Therefore, a page’s text cache is a stripped-down version. Still, it’s the most reliable way to tell if Google can crawl your links. If these links are in the plain text cache, Google can crawl them.

Beyond the plain text, Google Cache contains the indexed version of a page. It’s a handy way to identify missing items in the mobile version.

Many search optimizers ignore Google Cache. That is a mistake. All of a page’s essential ranking elements appear there, and there is no other way to confirm that Google has this key information.

To access the text-only version of Google Cache for any page, search Google for cache:[full-URL] and click Plain Text Version.


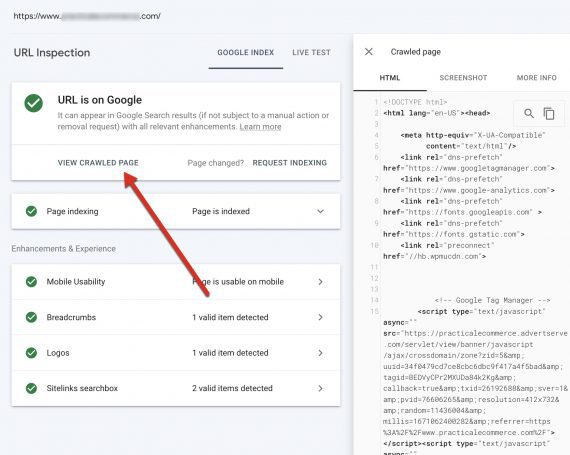

Not all pages appear in Google Cache. If a page isn’t there, use URL Inspection in Search Console or browser extensions to see how Google renders it.

“URL Inspection” in Search Console displays each page as Google understands it. Enter the URL, then click View Crawled Page.

From there, copy the HTML that Google uses to read the page. Paste this HTML code into a document like Google Docs and search (CTRL+F on Windows or CMD+F on Mac) for the link URLs to check. If the URLs are included in the HTML code, Google can see them.
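For more than a handful of URLs, the manual search can be scripted. The sketch below assumes the crawled HTML has been saved or pasted into a string; `find_links`, the sample markup, and the example.com URLs are hypothetical names used for illustration. Relative links are resolved against the page URL before comparison.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class HrefParser(HTMLParser):
    """Collect absolute link targets from <a> tags in an HTML string."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative hrefs such as "/pricing" against the page URL.
                self.links.add(urljoin(self.base_url, href))


def find_links(crawled_html, page_url, urls_to_check):
    """Map each URL to True if it appears as a link in the crawled HTML."""
    parser = HrefParser(page_url)
    parser.feed(crawled_html)
    return {url: url in parser.links for url in urls_to_check}


# Hypothetical crawled HTML copied from Search Console.
crawled_html = (
    '<a href="/pricing">Pricing</a> '
    '<a href="https://example.com/contact">Contact</a>'
)
result = find_links(
    crawled_html,
    "https://example.com/blog/",
    ["https://example.com/pricing", "https://example.com/about"],
)
print(result)
# {'https://example.com/pricing': True, 'https://example.com/about': False}
```

A URL mapped to False is absent from the HTML Google crawled, so Google cannot see that link on the page.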


Browser extensions. After confirming that Google can see the links, make sure they can be crawled. Examining the code will reveal both the nofollow attribute and the nofollow meta tag. Firefox has a native tool to view a page’s HTML source via CTRL+U on Windows and CMD+U on Mac. Then search for “nofollow” in the code.

The NoFollow browser extension, available for Firefox and Chrome, highlights nofollow links on page load, whether set by an attribute or a meta tag.
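The same check can be run on a page's source with a short script. This is a rough sketch, not a replacement for the extension: `check_nofollow` and the sample markup are hypothetical, and it treats the robots meta value `none` as including nofollow, which matches Google's documented meaning of that value.

```python
from html.parser import HTMLParser


class NofollowFinder(HTMLParser):
    """Detect a page-level nofollow meta tag and link-level rel="nofollow"."""

    def __init__(self):
        super().__init__()
        self.meta_nofollow = False
        self.nofollow_links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta":
            name = (attrs.get("name") or "").lower()
            if name in ("robots", "googlebot"):
                content = (attrs.get("content") or "").lower()
                directives = [d.strip() for d in content.split(",")]
                # "none" is shorthand for "noindex, nofollow".
                if "nofollow" in directives or "none" in directives:
                    self.meta_nofollow = True
        elif tag == "a" and attrs.get("href"):
            # rel may hold several values separated by spaces or commas.
            rel = (attrs.get("rel") or "").lower().replace(",", " ").split()
            if "nofollow" in rel:
                self.nofollow_links.append(attrs["href"])


def check_nofollow(html):
    """Return (page_has_nofollow_meta, list_of_nofollow_link_targets)."""
    finder = NofollowFinder()
    finder.feed(html)
    return finder.meta_nofollow, finder.nofollow_links


# Hypothetical page source, for illustration only.
page = (
    '<head><meta name="robots" content="index, nofollow"></head>'
    '<body><a href="/a" rel="nofollow noopener">A</a><a href="/b">B</a></body>'
)
print(check_nofollow(page))  # (True, ['/a'])
```

The first value flags a meta tag that applies to every link on the page; the list names the individual links marked nofollow.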

Not definitive

None of these methods will definitively tell you whether the links will affect rankings. Google’s algorithm is sophisticated and assigns importance and weight to links at its own discretion, which includes ignoring them. Nonetheless, discovering and crawling links is Google’s first step.