
(metamorworks/Shutterstock)

Autonomous and semi-autonomous vehicles are increasingly common, with most relying heavily on AI-powered cameras that quickly detect vehicles, people, and obstacles within the frame and use that information (along with other inputs, like depth sensor data) to operate or augment the operation of a vehicle. The AI models used in this process are, of course, trained on training datasets. But, as Stanford researchers explained in a recent blog post, there’s a problem: “Sadly, many datasets are rife with errors!” In that blog post, they outlined how their team (composed of Stanford researchers Daniel Kang, Nikos Arechiga, Sudeep Pillai, Peter Bailis, and Matei Zaharia) used new tools to detect errors in these datasets.

Any errors in these kinds of datasets can pose serious problems, because the AI models are evaluated on how they stack up against these datasets. The researchers demonstrated the problem by citing a public autonomous vehicle dataset from an otherwise-unidentified “leading labeling vendor that has produced labels for many autonomous vehicle companies” where “over 70% of the validation scenes contain at least one missing object box!”

To detect these errors, the researchers developed an abstraction called learned observation assertions (LOA). “LOA is an abstraction designed to find errors in ML deployment pipelines with as little manual specification of error types as possible,” they wrote. “LOA achieves this [by] allowing users to specify features over ML pipelines.”

The team created an example LOA system, called Fixy, to illustrate the approach. “Fixy learns feature distributions that specify likely and unlikely values (e.g., that a speed of 30mph is likely but 300mph is unlikely),” reads the abstract of the paper. “It then uses these feature distributions to score labels for potential errors.”
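The idea of learning a feature distribution and using it to score values can be sketched in a few lines of Python. This is only an illustration, not Fixy’s implementation: the choice of a normal distribution, the sample speeds, and the function names `fit_feature_distribution` and `log_likelihood` are all assumptions made for the example.

```python
import math
from statistics import NormalDist


def fit_feature_distribution(values):
    """Fit a normal distribution to observed feature values.

    Assumption: a Gaussian is used purely for illustration; the article
    does not say which distribution family Fixy learns.
    """
    return NormalDist.from_samples(values)


def log_likelihood(dist, value):
    """Log-density of `value` under `dist`, computed directly so that
    extremely unlikely values do not underflow to log(0)."""
    z = (value - dist.mean) / dist.stdev
    return -0.5 * z * z - math.log(dist.stdev) - 0.5 * math.log(2 * math.pi)


# Hypothetical object speeds (mph) taken from existing labels.
observed_speeds = [22.0, 28.0, 31.0, 35.0, 27.0, 30.0, 33.0, 25.0, 29.0, 40.0]
speed_dist = fit_feature_distribution(observed_speeds)

# Rank candidate values from least to most likely: the least likely
# ones are the first to be reviewed as potential label errors.
candidate_speeds = [30.0, 300.0]
ranked = sorted(candidate_speeds, key=lambda s: log_likelihood(speed_dist, s))

for speed in ranked:
    print(f"{speed:6.1f} mph  log-likelihood = {log_likelihood(speed_dist, speed):.1f}")
```

Under these made-up numbers, the 300mph value receives a far lower score than the 30mph value and therefore lands at the top of the review list.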

The researchers demonstrated how Fixy was used to identify an unlabeled motorcycle in a training dataset.

“We can specify the following features over the data: box volume, object velocity, and a feature that selects only model-predicted boxes that don’t overlap with a human label,” the blog explained. “These features are computed deterministically with short code snippets from the human labels and ML model predictions. Fixy will then execute on the new data and produce a rank-ordered list of possible errors.”
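As a rough sketch of what such short, deterministic feature snippets might look like, the Python below computes box volume and selects model-predicted boxes that do not overlap any human label. The `Box` class, the axis-aligned box simplification, the function names, and the sample coordinates are all hypothetical and are not taken from Fixy itself.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Box:
    """Axis-aligned 3D box given by its min and max corners, in meters.

    Assumption: real perception datasets typically use oriented boxes;
    axis-aligned boxes keep the example short.
    """
    x_min: float
    y_min: float
    z_min: float
    x_max: float
    y_max: float
    z_max: float


def box_volume(box: Box) -> float:
    """Feature: volume of a box in cubic meters."""
    return ((box.x_max - box.x_min)
            * (box.y_max - box.y_min)
            * (box.z_max - box.z_min))


def overlaps(a: Box, b: Box) -> bool:
    """True if the two boxes intersect along every axis."""
    return (a.x_min < b.x_max and b.x_min < a.x_max
            and a.y_min < b.y_max and b.y_min < a.y_max
            and a.z_min < b.z_max and b.z_min < a.z_max)


def unmatched_predictions(predicted: List[Box], human: List[Box]) -> List[Box]:
    """Feature: model-predicted boxes that overlap no human label."""
    return [p for p in predicted if not any(overlaps(p, h) for h in human)]


# Hypothetical scene: one human-labeled car and two model predictions.
human_labels = [Box(0.0, 0.0, 0.0, 4.5, 1.8, 1.5)]
model_predictions = [
    Box(0.2, 0.1, 0.0, 4.6, 1.9, 1.5),    # overlaps the labeled car
    Box(10.0, 3.0, 0.0, 12.0, 3.8, 1.2),  # no label nearby: possibly a missed object
]

candidates = unmatched_predictions(model_predictions, human_labels)
for box in candidates:
    print(f"unlabeled candidate with volume {box_volume(box):.2f} m^3")
```

In a full system, candidates like these would then be scored against learned feature distributions to produce the rank-ordered list the researchers describe; this sketch stops at the filtering step.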

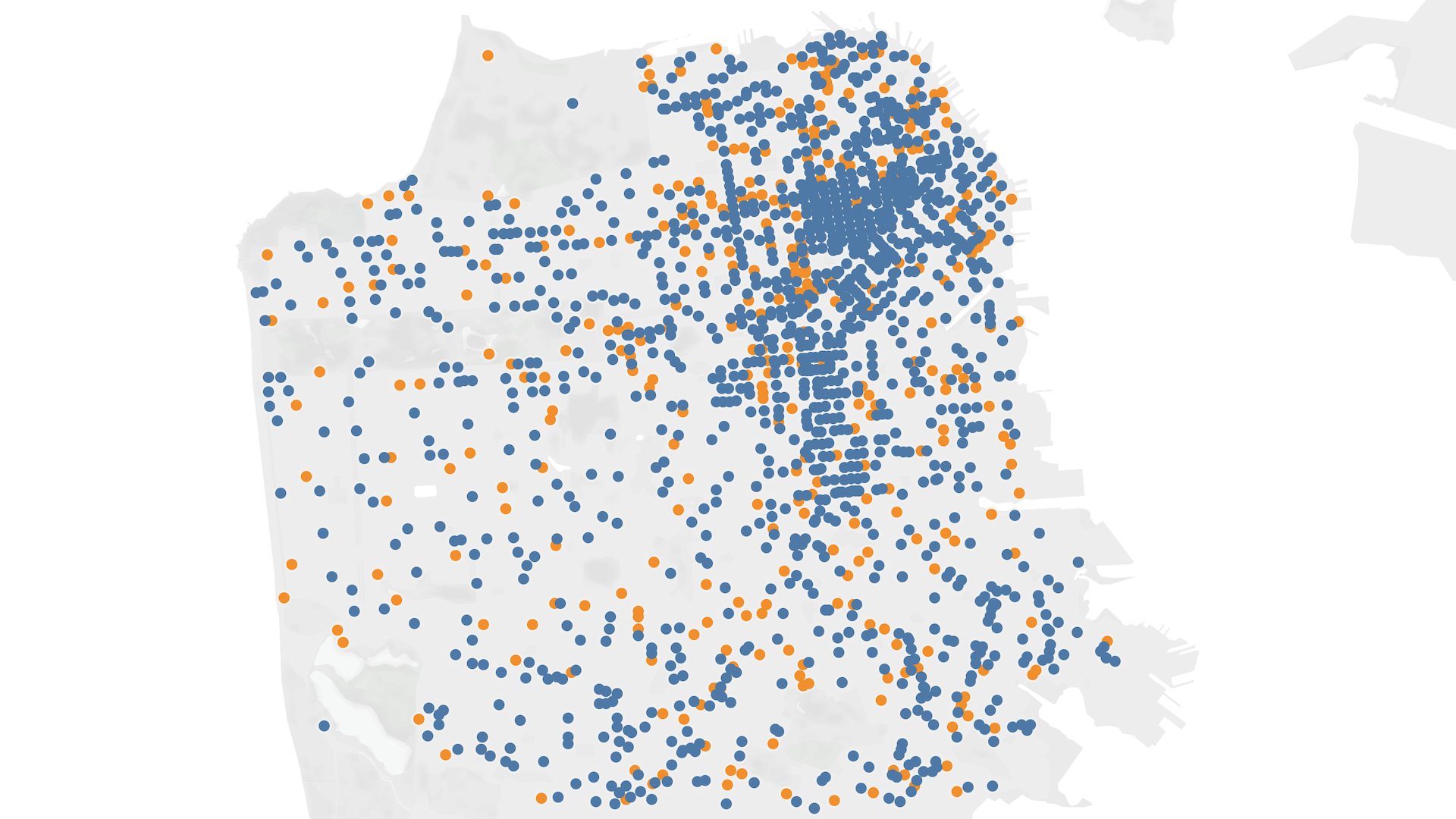

The team evaluated Fixy against Lyft’s Level 5 perception dataset and a dataset from the Toyota Research Institute. “LOA was also able to find errors in every single validation scene that had an error, which shows the utility of using a tool like LOA,” they wrote. Further, LOA was able to find 75% of the total errors identified within a particular scene from the Toyota dataset.

To learn more, read the blog post here.
