
In this blog, we explore how to leverage Databricks’ powerful Jobs API with Amazon Managed Workflows for Apache Airflow (MWAA) and integrate with CloudWatch to monitor Directed Acyclic Graphs (DAGs) with Databricks-based tasks. Additionally, we will show how to create alerts based on DAG performance metrics.

Before we get into the how-to section of this guidance, let’s quickly review what Databricks job orchestration and Amazon Managed Workflows for Apache Airflow (MWAA) are.

Databricks orchestration and alerting

Job orchestration in Databricks is a fully integrated feature. Customers can use the Jobs API or UI to create and manage jobs and features, such as email alerts for monitoring. With this powerful API-driven approach, Databricks jobs can orchestrate anything that has an API (e.g., pull data from a CRM). Databricks orchestration supports jobs with a single task or multiple tasks, as well as newly added jobs with Delta Live Tables.

Amazon Managed Airflow

Amazon Managed Workflows for Apache Airflow (MWAA) is a managed orchestration service for Apache Airflow. MWAA manages the open-source Apache Airflow platform on the customer’s behalf with the security, availability, and scalability of AWS. MWAA gives customers the additional benefit of easy integration with AWS services and a variety of third-party services via pre-existing plugins, allowing customers to create complex data processing pipelines.

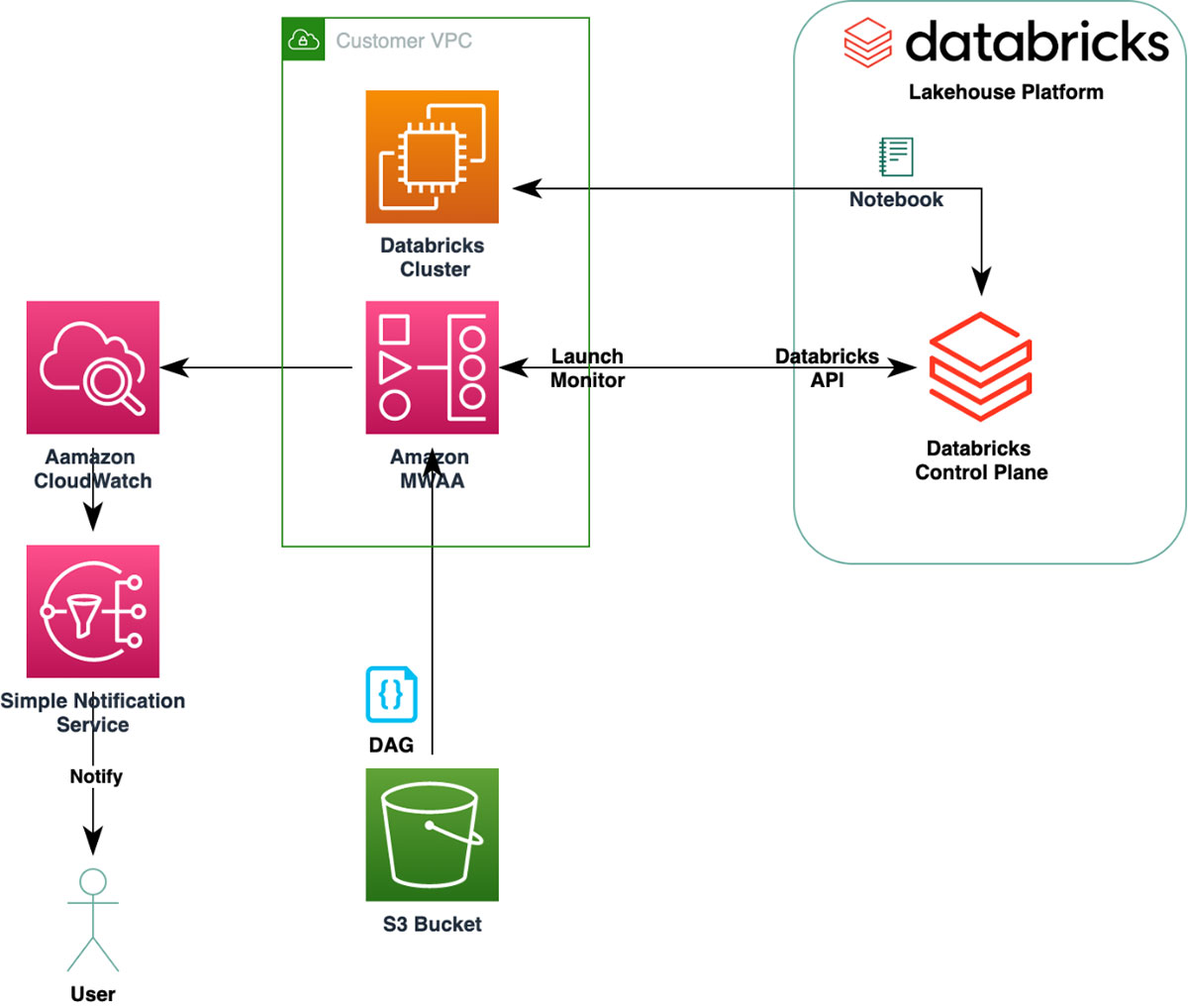

High-level architecture diagram

We will create a simple DAG that launches a Databricks cluster and executes a notebook. MWAA monitors the execution. Note: we have a simple job definition, but MWAA can orchestrate a variety of complex workloads.

Setting up the environment

This blog assumes you have access to a Databricks workspace. If you do not, sign up for a free one and configure a Databricks cluster. Additionally, create an API token to use when configuring the connection in MWAA.

To create an MWAA environment, follow the setup instructions in the Amazon MWAA documentation.

How to create a Databricks connection

The first step is to configure the Databricks connection in MWAA.
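In Airflow, a connection like this is typically created under Admin > Connections in the Airflow UI. As a minimal sketch of the values involved, the snippet below assumes the API token is stored in the connection's Extra field under a `token` key, which is one option the Airflow Databricks provider documents. The workspace URL and token are placeholders, and `databricks_connection_fields` is a hypothetical helper used only for illustration.

```python
import json


def databricks_connection_fields(workspace_url: str, token: str) -> dict:
    """Return the values to enter in the Airflow connection form (illustrative helper)."""
    return {
        # Matches the databricks_conn_id used by the example DAG in this post.
        "conn_id": "databricks_default",
        "conn_type": "databricks",
        "host": workspace_url,
        # Assumption: the token is supplied through the Extra field as JSON.
        "extra": json.dumps({"token": token}),
    }


# Placeholder values; substitute your own workspace URL and API token.
fields = databricks_connection_fields(
    "https://example.cloud.databricks.com", "dapi-EXAMPLE-TOKEN"
)
for name, value in fields.items():
    print(f"{name}: {value}")
```

Keeping the connection ID as `databricks_default` means the DAG can refer to it without any extra configuration.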

Example DAG

Next, upload your DAG into the S3 bucket folder you specified when creating the MWAA environment. Your DAG will automatically appear in the MWAA UI.
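The upload can be done from the S3 console or scripted. The following is a hedged sketch using boto3's `upload_file`; the bucket name, the local path, and the `dags` folder are assumptions to be replaced with the values chosen when the MWAA environment was created. Both helper functions are hypothetical names for this example.

```python
import os


def dag_object_key(local_path: str, dags_folder: str = "dags") -> str:
    """Build the S3 object key for a DAG file inside the DAGs folder."""
    return f"{dags_folder.strip('/')}/{os.path.basename(local_path)}"


def upload_dag(bucket: str, local_path: str, dags_folder: str = "dags") -> str:
    """Upload a DAG file to the MWAA bucket and return the key that was written."""
    import boto3  # imported lazily so dag_object_key works without boto3 installed

    key = dag_object_key(local_path, dags_folder)
    boto3.client("s3").upload_file(local_path, bucket, key)
    return key


# Only the key is computed here; call upload_dag(...) with real values to upload.
example_key = dag_object_key("/tmp/databricks_dag.py", "dags/")
print(example_key)
```

Calling `upload_dag("my-mwaa-bucket", "databricks_dag.py")` with a real bucket name would place the file where MWAA looks for DAGs, provided the caller's AWS credentials allow writing to that bucket.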

Here’s an example of an Airflow DAG that creates the configuration for a new Databricks jobs cluster and a Databricks notebook task, and submits the notebook task for execution in Databricks.

    from airflow import DAG
    from airflow.providers.databricks.operators.databricks import DatabricksSubmitRunOperator, DatabricksRunNowOperator
    from datetime import datetime, timedelta

    # Define params for Submit Run Operator
    new_cluster = {
        'spark_version': '7.3.x-scala2.12',
        'num_workers': 2,
        'node_type_id': 'i3.xlarge',
        "aws_attributes": {
            "instance_profile_arn": "arn:aws:iam::XXXXXXX:instance-profile/databricks-data-role"
        }
    }

    notebook_task = {
        'notebook_path': '/Users/xxxxx@XXXXX.com/test',
    }

    # Define params for Run Now Operator
    notebook_params = {
        "Variable": 5
    }

    default_args = {
        'owner': 'airflow',
        'depends_on_past': False,
        'email_on_failure': False,
        'email_on_retry': False,
        'retries': 1,
        'retry_delay': timedelta(minutes=2)
    }

    with DAG('databricks_dag',
             start_date=datetime(2021, 1, 1),
             schedule_interval="@daily",
             catchup=False,
             default_args=default_args
             ) as dag:

        # Submit the notebook task to a new jobs cluster
        opr_submit_run = DatabricksSubmitRunOperator(
            task_id='submit_run',
            databricks_conn_id='databricks_default',
            new_cluster=new_cluster,
            notebook_task=notebook_task
        )
        opr_submit_run

Get the latest version of the file from the GitHub link.
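To build some intuition for what the `submit_run` task sends to Databricks, the sketch below assembles a request body of the kind the Jobs API runs-submit endpoint accepts. It is an approximation under stated assumptions, not the operator's actual implementation: it assumes the cluster and notebook settings are passed through unchanged and that the run name defaults to the Airflow task ID. `build_submit_run_payload` is a hypothetical helper.

```python
import json


def build_submit_run_payload(task_id: str, new_cluster: dict, notebook_task: dict) -> dict:
    """Assemble an approximate runs-submit request body (illustrative only)."""
    return {
        "run_name": task_id,  # assumption: the run name defaults to the task ID
        "new_cluster": new_cluster,
        "notebook_task": notebook_task,
    }


# Same placeholder values as the example DAG in this post.
payload = build_submit_run_payload(
    "submit_run",
    {
        "spark_version": "7.3.x-scala2.12",
        "num_workers": 2,
        "node_type_id": "i3.xlarge",
    },
    {"notebook_path": "/Users/xxxxx@XXXXX.com/test"},
)
print(json.dumps(payload, indent=2))
```

Printing the payload this way can help when comparing the DAG configuration against what appears in the Databricks run details.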

Trigger the DAG in MWAA.

Once triggered, you can see the job cluster on the Databricks cluster UI page.

Troubleshooting

Amazon MWAA uses Amazon CloudWatch for all Airflow logs. These are helpful for troubleshooting DAG failures.

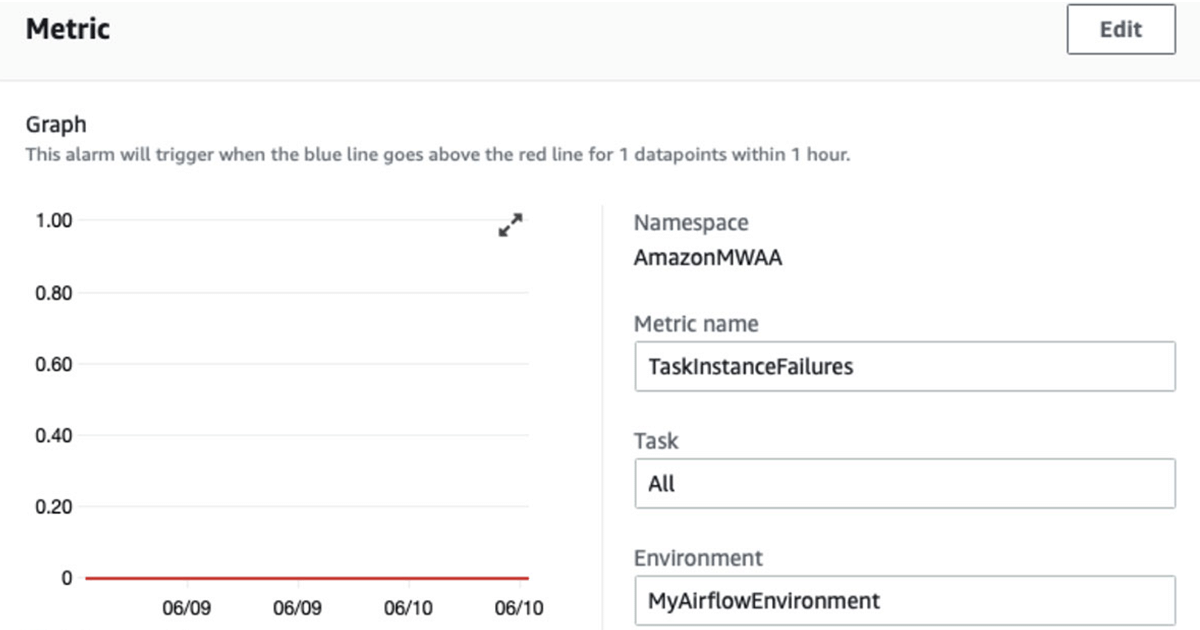
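The same logs can also be searched programmatically. The sketch below assumes the MWAA log groups follow the `airflow-<environment name>-<log type>` naming pattern and that task logging is enabled for the environment. The environment name is a placeholder, and both helpers are hypothetical names for this example.

```python
import time


def mwaa_log_group(environment_name: str, log_type: str = "Task") -> str:
    """Build the CloudWatch log group name for an MWAA environment (assumed pattern)."""
    return f"airflow-{environment_name}-{log_type}"


def recent_error_messages(environment_name: str, minutes: int = 60) -> list:
    """Return messages containing ERROR from the task logs in the last `minutes`."""
    import boto3  # imported lazily so mwaa_log_group works without boto3 installed

    start_ms = int((time.time() - minutes * 60) * 1000)
    response = boto3.client("logs").filter_log_events(
        logGroupName=mwaa_log_group(environment_name),
        filterPattern="ERROR",
        startTime=start_ms,
    )
    # Only the first page of results is read; follow nextToken for more.
    return [event["message"] for event in response["events"]]


group_name = mwaa_log_group("my-mwaa-env")
print(group_name)
```

Calling `recent_error_messages("my-mwaa-env")` requires AWS credentials with permission to read the log group.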

CloudWatch metrics and alerts

Next, we create a metric to monitor the successful completion of the DAG. Amazon MWAA supports many metrics.

We use TaskInstanceFailures to create an alarm.

For the threshold, we select zero (i.e., we want to be notified when there are any failures over a period of one hour).

Finally, we select an email notification as the action.
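The alarm described above can also be defined in code. The following is a minimal sketch with boto3's `put_metric_alarm`, assuming the metric is published in the `AmazonMWAA` namespace and that email delivery goes through an existing SNS topic with an email subscription. The metric dimensions are left as a parameter because they depend on the environment; copy them from the metric as it appears in the CloudWatch console. The SNS topic ARN and the helper names are placeholders.

```python
def build_failure_alarm(dimensions: list, sns_topic_arn: str) -> dict:
    """Build put_metric_alarm arguments for any task failure within one hour."""
    return {
        "AlarmName": "DatabricksDAGFailure",
        "Namespace": "AmazonMWAA",  # assumption: namespace for MWAA Airflow metrics
        "MetricName": "TaskInstanceFailures",
        "Dimensions": dimensions,
        "Statistic": "Sum",
        "Period": 3600,  # one hour, in seconds
        "EvaluationPeriods": 1,
        "Threshold": 0,
        "ComparisonOperator": "GreaterThanThreshold",  # alarm on any failure
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }


def create_failure_alarm(dimensions: list, sns_topic_arn: str) -> None:
    """Create or update the alarm in CloudWatch."""
    import boto3  # imported lazily so build_failure_alarm works without boto3

    boto3.client("cloudwatch").put_metric_alarm(
        **build_failure_alarm(dimensions, sns_topic_arn)
    )


# Placeholder dimension and topic ARN; replace with values from your account.
alarm = build_failure_alarm(
    [{"Name": "Environment", "Value": "my-mwaa-env"}],
    "arn:aws:sns:us-east-1:123456789012:example-topic",
)
print(alarm["AlarmName"], alarm["Threshold"], alarm["Period"])
```

Treating missing data as not breaching is a design choice for this sketch: periods in which no tasks ran do not trigger the alarm.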

Here’s an example of the CloudWatch email notification generated when the DAG fails.

You are receiving this email because your Amazon CloudWatch Alarm “DatabricksDAGFailure” in the US East (N. Virginia) region has entered the ALARM state, because “Threshold Crossed

Conclusion

In this blog, we showed how to create an Airflow DAG that configures a new Databricks jobs cluster and a Databricks notebook task, and submits the notebook task for execution in Databricks. We leveraged MWAA’s out-of-the-box integration with CloudWatch to monitor our example workflow and receive notifications when there are failures.

What’s next

Code Repo

MWAA-DATABRICKS Sample DAG Code
