[ad_1]

We’re excited for the discharge of Delta Connectors 0.3.0, which introduces help for writing Delta tables. The important thing options on this launch are:

Delta Standalone

- Write performance – This launch introduces new APIs to help creating and writing Delta tables with out Apache Spark™. Exterior processing engines can write Parquet knowledge recordsdata after which use the APIs to commit the recordsdata to the Delta desk atomically. Following the Delta Transaction Log Protocol, the implementation makes use of optimistic concurrency management to handle a number of writers, mechanically generates checkpoint recordsdata, and manages log and checkpoint cleanup in line with the protocol. The primary Java class uncovered is

OptimisticTransaction, which is accessed by way ofDeltaLog.startTransaction(). OptimisticTransaction.markFilesAsRead(readPredicates)have to be used to learn all metadata through the transaction (and never theDeltaLog. It’s used to detect concurrent updates and decide if logical conflicts between this transaction and previously-committed transactions could be resolved.OptimisticTransaction.commit(actions, operation, engineInfo)is used to commit modifications to the desk. If a conflicting transaction has been dedicated first (see above) an exception is thrown, in any other case, the desk model that was dedicated is returned.- Idempotent writes could be carried out utilizing

OptimisticTransaction.txnVersion(appId)to examine for model will increase dedicated by the identical utility. - Every commit should specify the

Operationbeing carried out by the transaction. - Transactional ensures for concurrent writes on Microsoft Azure and Amazon S3. This launch contains customized extensions to help concurrent writes on Azure and S3 storage programs, which on their very own should not have the required atomicity and sturdiness ensures. Please be aware that transactional ensures are solely offered for concurrent writes on S3 from a single cluster.

- Reminiscence-optimized iterator implementation for studying recordsdata in a snapshot:

DeltaScanintroduces an iterator implementation for studying theAddFilesin a snapshot with help for partition pruning. It may be accessed by way ofSnapshot.scan()orSnapshot.scan(predicate), the latter of which filters recordsdata based mostly on thepredicateand any partition columns within the file metadata. This API considerably reduces the reminiscence footprint when studying the recordsdata in a snapshot and instantiating aDeltaLog(as a consequence of inside utilization).

- Partition filtering for metadata reads and battle detection in writes: This launch introduces a easy expression framework for partition pruning in metadata queries. When studying recordsdata in a snapshot, filter the returned

AddFileson partition columns by passing apredicateintoSnapshot.scan(predicate). When updating a desk throughout a transaction, specify which partitions had been learn by passing areadPredicateintoOptimisticTransaction.markFilesAsRead(readPredicate)to detect logical conflicts and keep away from transaction conflicts when potential.

- Miscellaneous updates:

DeltaLog.getChanges()exposes an incremental metadata modifications API. VersionLog wraps the model quantity and the record of actions in that model.ParquetSchemaConverterconverts aStructTypeschema to a Parquet schema.- Repair #197 for

RowRecordin order that values in partition columns could be learn. - Miscellaneous bug fixes.

Delta Connectors

The Delta Standalone venture in Delta connectors, previously often known as Delta Standalone Reader (DSR), is a JVM library that can be utilized to learn and write Delta Lake tables. Not like Delta Lake Core, this venture doesn’t use Spark to learn or write tables and has just a few transitive dependencies. It may be utilized by any utility that can’t use a Spark cluster (learn extra: Learn how to Natively Question Your Delta Lake with Scala, Java, and Python). The venture permits builders to construct a Delta connector for an exterior processing engine following the Delta protocol with out utilizing a manifest file. The reader element ensures builders can learn the set of parquet recordsdata related to the Delta desk model requested. As a part of Delta Standalone 0.3.0, the reader features a memory-optimized, lazy iterator implementation for VERSION AS OF), and partition elimination utilizing the partition schema of the Delta desk. For extra particulars see the devoted README.md.

What’s Delta Standalone?

DeltaScan.getFiles (PR #194). The next code pattern reads Parquet recordsdata in a distributed method the place Delta Standalone (as of 0.3.0) contains Snapshot::scan(filter)::getFiles, which helps partition pruning and an optimized inside iterator implementation.

import io.delta.standalone.Snapshot;

DeltaLog log = DeltaLog.forTable(new Configuration(), "$TABLE_PATH$");

Snapshot latestSnapshot = log.replace();

StructType schema = latestSnapshot.getMetadata().getSchema();

DeltaScan scan = latestSnapshot.scan(

new And(

new And(

new EqualTo(schema.column("yr"), Literal.of(2021)),

new EqualTo(schema.column("month"), Literal.of(11))),

new EqualTo(schema.column("buyer"), Literal.of("XYZ"))

)

);

CloseableIterator iter = scan.getFiles();

attempt {

whereas (iter.hasNext()) {

AddFile addFile = iter.subsequent();

// Zappy engine to deal with studying knowledge in `addFile.getPath()` and apply any `scan.getResidualPredicate()`

}

} lastly {

iter.shut();

}

As effectively, Delta Standalone 0.3.0 features a new author element that permits builders to generate parquet recordsdata themselves and add these recordsdata to a Delta desk atomically, with help for idempotent writes (learn extra: Delta Standalone Author design doc). The next code snippet exhibits decide to the transaction log so as to add the brand new recordsdata and take away the outdated incorrect recordsdata after writing Parquet recordsdata to storage.

import io.delta.standalone.Operation; import io.delta.standalone.actions.RemoveFile; import io.delta.standalone.exceptions.DeltaConcurrentModificationException; import io.delta.standalone.varieties.StructType; RecordremoveOldFiles = existingFiles.stream() .map(path -> addFileMap.get(path).take away()) .accumulate(Collectors.toList()); Record addNewFiles = newDataFiles.getNewFiles() .map(file -> new AddFile( file.getPath(), file.getPartitionValues(), file.getSize(), System.currentTimeMillis(), true, // isDataChange null, // stats null // tags ); ).accumulate(Collectors.toList()); Record totalCommitFiles = new ArrayList(); totalCommitFiles.addAll(removeOldFiles); totalCommitFiles.addAll(addNewFiles); // Zippy is in reference to a generic engine attempt { txn.commit(totalCommitFiles, new Operation(Operation.Title.UPDATE), "Zippy/1.0.0"); } catch (DeltaConcurrentModificationException e) { // deal with exception right here } Hive 3 utilizing Delta Standalone

Delta Standalone 0.3.0 helps Hive 2 and three permitting Hive to natively learn a Delta desk. The next is an instance of create a Hive exterior desk to entry your Delta desk.

CREATE EXTERNAL TABLE deltaTable(col1 INT, col2 STRING) STORED BY 'io.delta.hive.DeltaStorageHandler' LOCATION '/delta/desk/path'For extra particulars on arrange Hive, please consult with Delta Connectors > Hive Connector. It is very important be aware this connector solely helps Apache Hive; it doesn't help Apache Spark or Presto.

Studying Delta Lake from PrestoDB

As demonstrated in PrestoCon 2021 session Delta Lake Connector for Presto, the lately merged Presto/Delta connector makes use of the Delta Standalone venture to natively learn the Delta transaction log with out the necessity of a manifest file. The memory-optimized, lazy iterator included in Delta Standalone 0.3.0 permits PrestoDB to effectively iterate by means of the Delta transaction log metadata and avoids OOM points when studying massive Delta tables.

With the Presto/Delta connector, along with querying your Delta tables natively with Presto, you should utilize the

@syntax to carry out time journey queries and question earlier variations of your Delta desk by model or timestamp. The next code pattern is querying earlier variations of the identical NYCTaxi 2019 dataset utilizing model.# Model 1 of s3://…/nyctaxi_2019_part desk WITH nyctaxi_2019_part AS ( SELECT * FROM deltas3."$path$"."s3://…/nyctaxi_2019_part@v1) SELECT COUNT(1) FROM nyctaxi_2019_part; # output 59354546 # Model 5 of s3://…/nyctaxi_2019_part desk WITH nyctaxi_2019_part AS ( SELECT * FROM deltas3."$path$"."s3://…/nyctaxi_2019_part@v5) SELECT COUNT(1) FROM nyctaxi_2019_part; # output 78959576With this connector, you may each specify the desk out of your metastore and question the Delta desk straight from the file path utilizing the syntax of

deltas3."$path$"."s3://…For extra details about the PrestoDB/Delta connector:

Word, we're at the moment working with the Trino (right here’s the present department that accommodates the Trino 359 Delta Lake reader) and Athena communities to supply native Delta Lake connectivity.

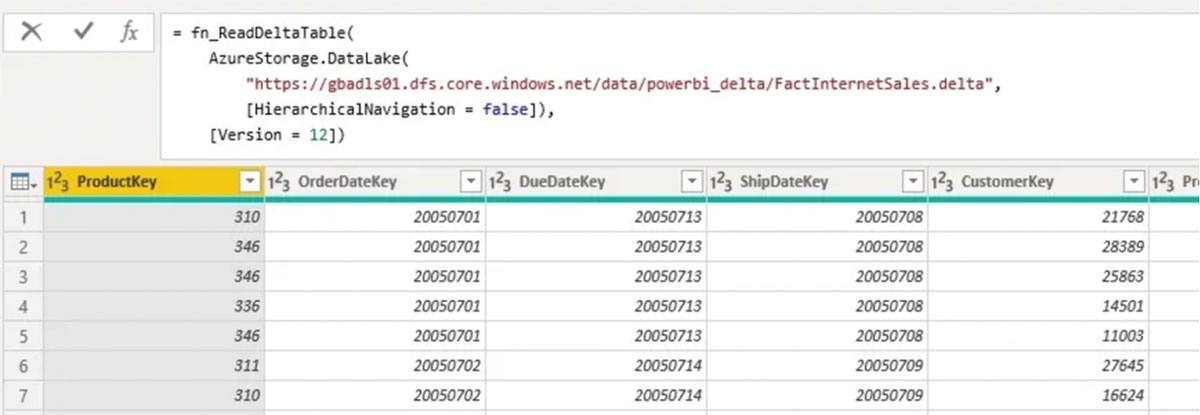

Studying Delta Lake from Energy BI Natively

We additionally needed to present a shout-out to Gerhard Brueckl (github: gbrueckl) for persevering with to enhance Energy BI connectivity to Delta Lake. As a part of Delta Connectors 0.3.0, the Energy BI connector contains on-line/scheduled refresh within the PowerBI service, help for Delta Lake time journey, and partition elimination utilizing the partition schema of the Delta desk.

Supply: Studying Delta Lake Tables natively in PowerBI For extra info, consult with Studying Delta Lake Tables natively in PowerBI or try the code-base.

Dialogue

We're actually excited in regards to the fast adoption of Delta Lake by the info engineering and knowledge sciences neighborhood. For those who’re curious about studying extra about Delta Standalone or any of those Delta connectors, try the next assets:

Credit

We wish to thank the next contributors for updates, doc modifications, and contributions in Delta Standalone 0.3.0: Alex, Allison Portis, Denny Lee, Gerhard Brueckl, Pawel Kubit, Scott Sandre, Shixiong Zhu, Wang Wei, Yann Byron, Yuhong Chen, and gurunath.

[ad_2]