Geospatial Analytics and AI with Databricks and CARTO

This is a collaborative post by Databricks and CARTO. We thank Javier de la Torre, Founder and Chief Strategy Officer at CARTO, for his contributions.

Today, CARTO is announcing the beta launch of its new product, the Spatial Extension for Databricks, which provides a simple installation and seamless integration with the Databricks Lakehouse platform and, with it, a broad and powerful collection of spatial analysis capabilities on the lakehouse. CARTO is a cloud-native geospatial technology company that works with some of the world’s largest companies to drive location intelligence and insights.

One of our joint customers, JLL, is already leveraging the spatial capabilities of CARTO and the power and scale of Databricks. As a world leader in real estate services, JLL manages 4.6 billion square feet of property and facilities and handles 37,500 leasing transactions globally. Analyzing and understanding location data is a fundamental driver of JLL’s success.

To leverage location data and analytics across the entire organization, their data team needs to serve the most advanced spatial data scientists and real estate consultants in the field.

JLL turned to CARTO to develop some of their solutions (Gea, Valorem, Pix, CMQ), which are used by their consultants for market analysis and property valuation. The solutions required market localization for multiple countries across the globe. Access to big data and data science (using Databricks), as well as a rich user experience, were key priorities to ensure consultants would adopt the tool in their day-to-day work.

By leveraging CARTO and Databricks together, JLL is able to provide an advanced infrastructure for data scientists to perform data modeling on the fly, as well as a platform to easily build solutions for stakeholders across the business. By unlocking first-class map-based data visualizations and data pipeline solutions through a single platform, JLL is able to decrease complexity, save time (and therefore human resources) and avoid errors in the DataOps, DevOps and GISOps processes.

The solution has led to faster deliveries on client mandates, extended consultant knowledge (beyond their traditional in-depth knowledge of their areas) and brand positioning for JLL as a highly data-driven and location-aware firm in the real estate industry. Discover the specifics by downloading the case study.

“CARTO Spatial Extension for Databricks represents a huge advance on spatial platforms. With cloud-native push-down queries to the Databricks Lakehouse platform, we now have the best analytics and mapping platforms working together. With the volumes of data we are working with right now, no other solution could match the performance and convenience of this cloud-native approach.” – Elena Rivas, Head of Engineering & Data Science at JLL

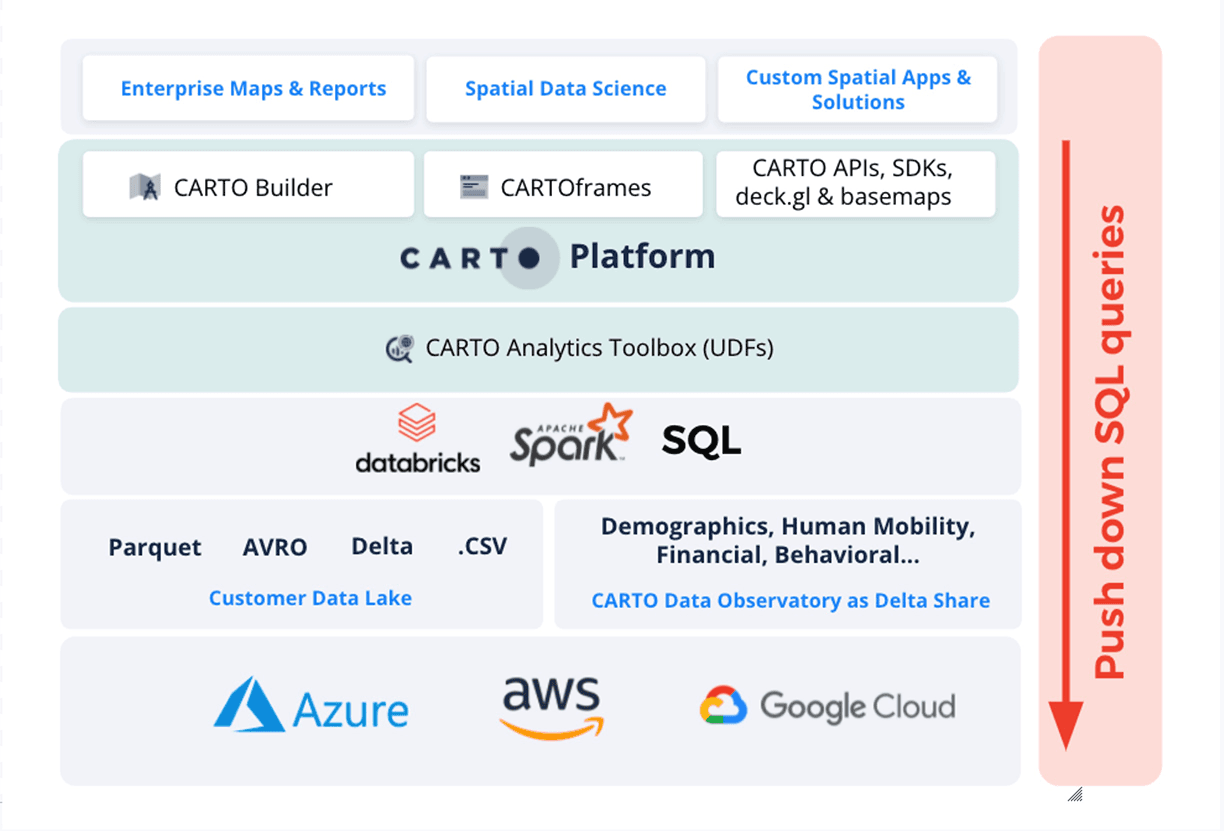

Bringing fully cloud-native spatial analytics to Databricks

CARTO extends Databricks to support spatial workflows natively by enabling users to:

- Import spatial data into Databricks using many spatial data formats, such as GeoJSON, shapefiles, KML, CSV, GeoPackages and more.

- Perform spatial analytics using Spatial SQL similar to PostGIS, but with the scalability of Apache Spark™.

- Use CARTO Builder to create insightful maps from SQL, then style and explore these geovisualizations with a full cartographic tool.

- Build map applications on top of Databricks using Google Maps or other providers, combined with the power of the deck.gl visualization library.

- Access more than 10,000 curated location datasets, such as demographics, human mobility or weather data, to enrich your spatial analysis or apps using Delta Sharing.
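To make the import step a little more concrete, here is a minimal sketch of converting a GeoJSON point feature into WKT (well-known text), a representation that spatial SQL engines commonly accept. It is only an illustration: the function name `geojson_point_to_wkt` and the sample feature are hypothetical, and this is not how CARTO's importer works internally.

```python
import json


def geojson_point_to_wkt(feature_json: str) -> str:
    """Convert a GeoJSON Point feature (as a JSON string) to a WKT string."""
    feature = json.loads(feature_json)
    geometry = feature["geometry"]
    if geometry["type"] != "Point":
        raise ValueError("this sketch only handles Point geometries")
    # GeoJSON and WKT both order coordinates as x (longitude), then y (latitude).
    lon, lat = geometry["coordinates"][:2]
    return f"POINT ({lon} {lat})"


# Hypothetical sample feature, used only for illustration.
feature = (
    '{"type": "Feature", "properties": {"name": "Madrid"}, '
    '"geometry": {"type": "Point", "coordinates": [-3.7038, 40.4168]}}'
)
print(geojson_point_to_wkt(feature))  # POINT (-3.7038 40.4168)
```

A real importer would also need to handle lines, polygons, multi-geometries and coordinate reference systems, which this sketch leaves out.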

Spatially extended with GeoMesa and the CARTO Analytics Toolbox

CARTO extends Databricks using User Defined Functions (UDFs) to add spatial support. Over the past few months, the teams at CARTO and Azavea have been working on a new open source library called the CARTO Analytics Toolbox, which exposes GeoMesa spatial functionality as a set of spatial UDFs. Think of it as PostGIS for Spark.

CARTO needs this library to be available in your Databricks cluster in order to push down spatial queries. Check out the documentation on how to install the Analytics Toolbox in your cluster.
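For readers new to the idea, a spatial UDF is an ordinary function that the SQL engine calls once per row. The sketch below shows the kind of predicate such a function might evaluate, a point-in-polygon test using the classic ray-casting method. It is an assumption-laden illustration, not the Analytics Toolbox or GeoMesa implementation: the name `polygon_contains` is hypothetical, coordinates are treated as planar, and points exactly on the boundary are not handled specially.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_contains(polygon: List[Point], point: Point) -> bool:
    """Ray-casting test: True if the point lies inside the polygon ring."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point matter.
        if (y1 > y) != (y2 > y):
            # x coordinate where the edge crosses that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            # Count crossings to the right of the point; an odd count means inside.
            if x < x_cross:
                inside = not inside
    return inside


square = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
print(polygon_contains(square, (2.0, 2.0)))  # True
print(polygon_contains(square, (5.0, 2.0)))  # False
```

In a Spark environment, a Python function like this could be registered with `spark.udf.register` and then called from SQL, although a JVM-based library would normally be much faster for this kind of work.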

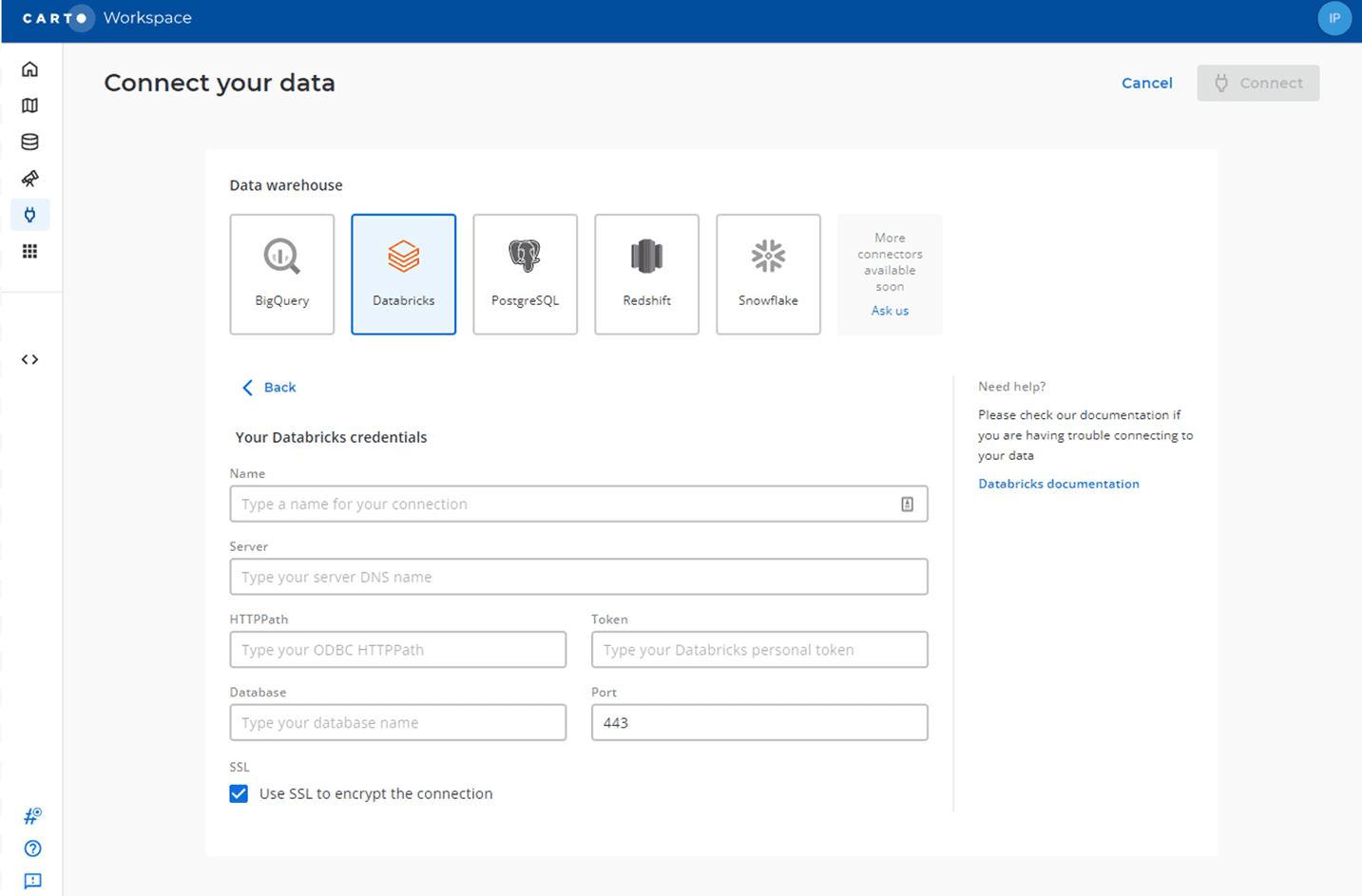

Now that we have spatial support in our cluster, we can go to CARTO and connect it. You do so by navigating to the Connections section and filling in the details of your ODBC connection.

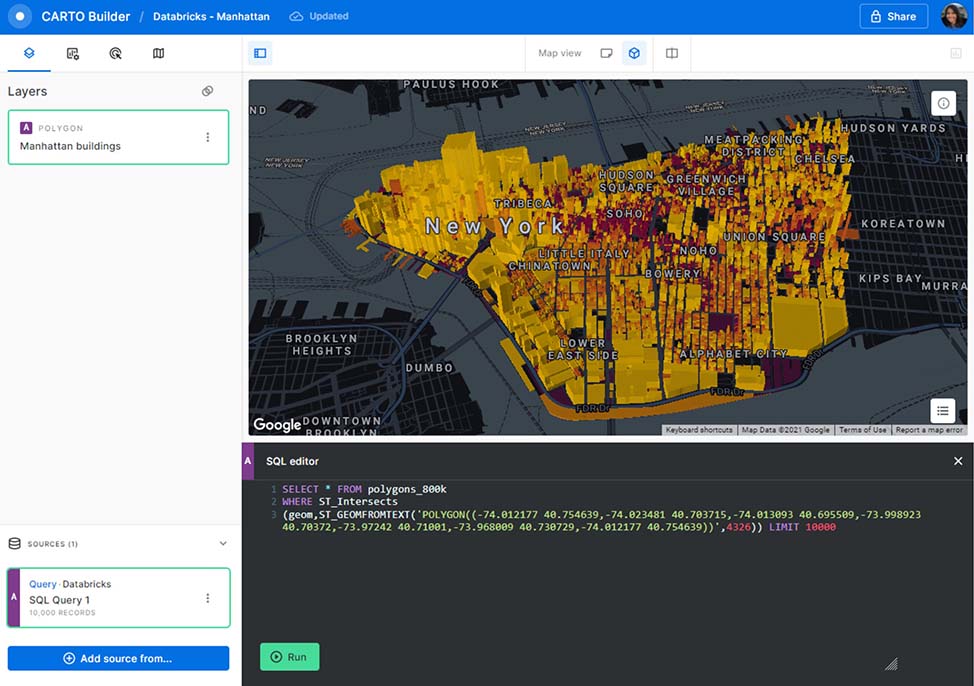

Write SQL, get maps

Once connected to Databricks, we can explore spatial data or build a map from scratch. In CARTO you create a map by adding layers defined in SQL. This SQL is executed dynamically on a Databricks cluster, so if the data changes, the map updates automatically. Internally, CARTO checks the size of the geographic data and decides the most efficient way to transfer it, either as a single document or as a set of tiles.
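The document-versus-tiles decision can be pictured with a small sketch. Everything here is an assumption made for illustration: the post does not say how CARTO makes the choice, so the `MAX_DOCUMENT_BYTES` cutoff and the `choose_transfer` function are hypothetical. The `lonlat_to_tile` helper uses the standard Web Mercator XYZ tiling formula to show how a location maps to a tile index at a given zoom level.

```python
import math
from typing import Tuple

# Hypothetical cutoff; the source does not state the rule CARTO applies.
MAX_DOCUMENT_BYTES = 30 * 1024 * 1024


def choose_transfer(size_bytes: int, max_document_bytes: int = MAX_DOCUMENT_BYTES) -> str:
    """Return 'document' for small results and 'tiles' for large ones."""
    return "document" if size_bytes <= max_document_bytes else "tiles"


def lonlat_to_tile(lon: float, lat: float, zoom: int) -> Tuple[int, int]:
    """Web Mercator XYZ tile index containing a longitude/latitude pair."""
    n = 2 ** zoom
    x = int((lon + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so that values on the far edge still fall in the last tile.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


print(choose_transfer(1024))                    # document
print(choose_transfer(MAX_DOCUMENT_BYTES + 1))  # tiles
print(lonlat_to_tile(0.0, 0.0, 1))              # (1, 1)
```

With tiling, a client only requests the tiles that intersect the current viewport, which is why it suits datasets too large to send in one response.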

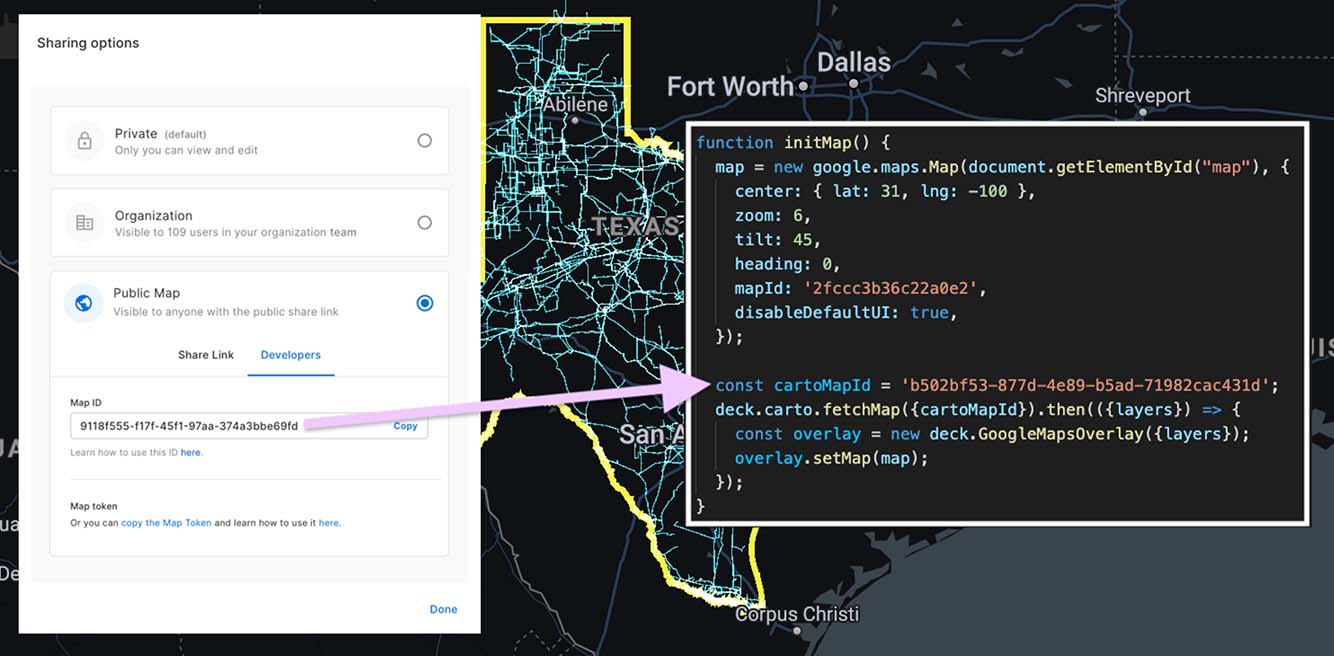

Building map applications on top of Databricks

Customers like JLL very often build custom spatial applications that either simplify a spatial analysis use case or provide a more direct interface to business intelligence or information. CARTO facilitates the creation of these apps with a complete set of development libraries and APIs.

For visualization, CARTO uses the powerful deck.gl visualization library. You use CARTO Builder to design your maps and then reference them in your code. CARTO handles visualizing large datasets, updating the maps, and everything in between.

Everything happens somewhere

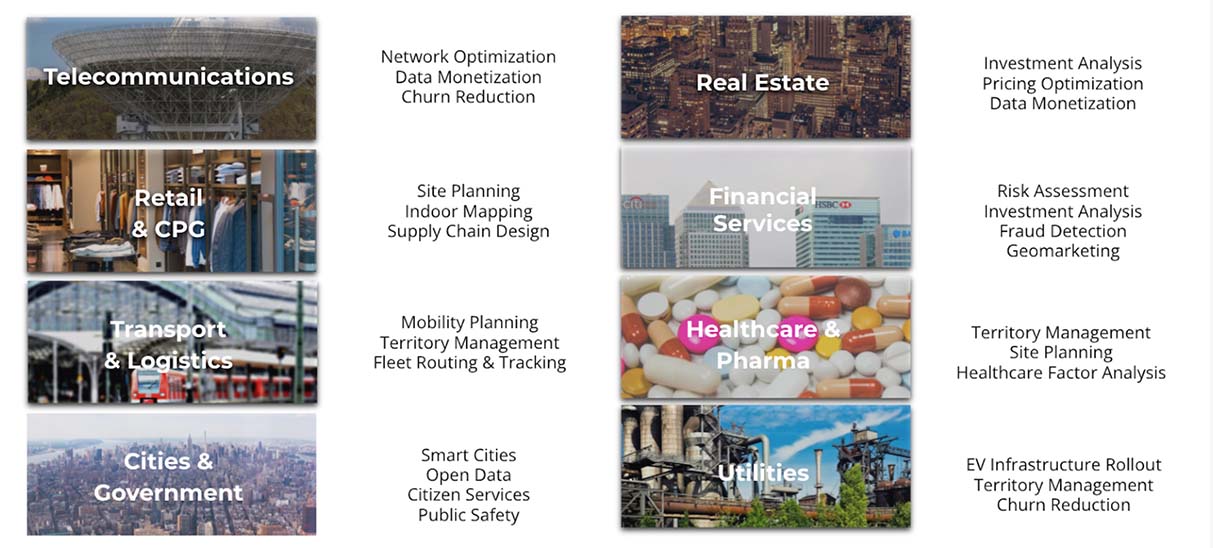

Location is a fundamental dimension for many different analytical workflows. You can find it in many use cases in virtually every vertical. Here’s just a sample of the kinds of things CARTO customers have been doing with Spatial Analytics.

Towards full cloud-native support of CARTO in Databricks

Many of the largest organizations using CARTO leverage Databricks for their analytics. With the power of Spark and Delta Lake, connected with CARTO, it’s now possible to push down all spatial workflows to Databricks clusters. We see this as a major step forward for Spatial Analytics using Big Data.

With this beta launch of the CARTO Spatial Extension, we’re providing the fundamental building blocks for Location Intelligence in Databricks. If you work with an external GIS (geographic information system) in parallel with Databricks, this integration will give you the best of both worlds.

Get started with Spatial Analytics in Databricks

If you would like to test drive the beta CARTO Spatial Extension for Databricks, sign up for a free 14-day trial today.

At Databricks, we’re excited to work with CARTO and supercharge geospatial analysis at scale. This collaboration opens up location-based analysis workflows for users of our Lakehouse Platform to drive even better decisions across verticals and for a wealth of use cases.

If you work with geospatial data, you will be interested in the upcoming webinar Geospatial Analysis and AI at Scale, hosted by Databricks on Tuesday, December 14th. Register now.