Methods to Construct Scalable Actual-time Purposes on a Databricks Lakehouse with Confluent

For a lot of organizations, real-time information assortment and information processing at scale can present immense benefits for enterprise and operational insights. The necessity for real-time information introduces technical challenges that require expert professional expertise to construct customized integration for a profitable real-time implementation.

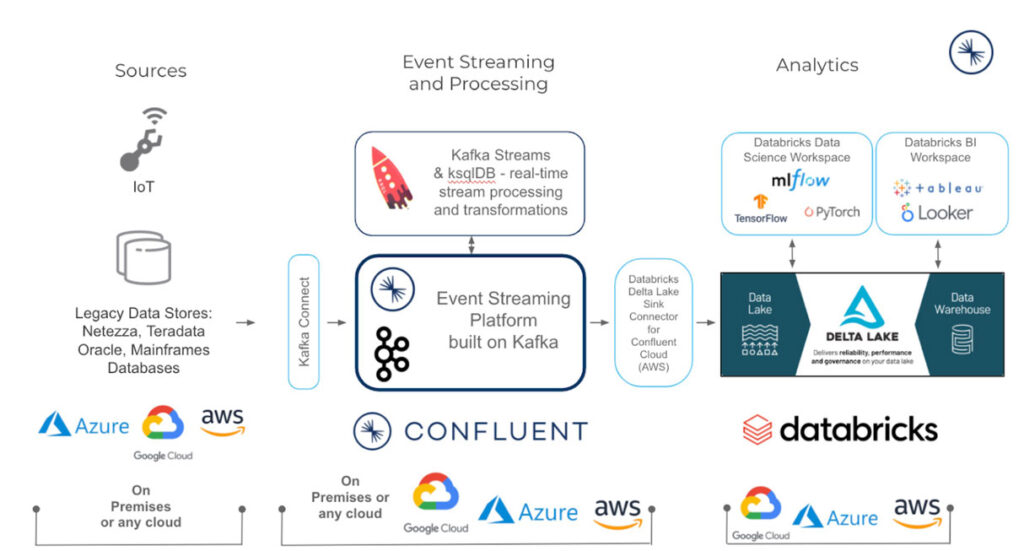

For patrons trying to implement streaming real-time purposes, our associate Confluent just lately introduced a brand new Databricks Connector for Confluent Cloud. This new fully-managed connector is designed particularly for the information lakehouse and supplies a strong answer to construct and scale real-time purposes resembling utility monitoring, web of issues (IoT), fraud detection, personalization and gaming leaderboards. Organizations can now use an built-in functionality that streams legacy and cloud information from Confluent Cloud straight into the Databricks Lakehouse for enterprise intelligence (BI), information analytics and machine studying use instances on a single platform.

Using the perfect of Databricks and Confluent

Streaming information via Confluent Cloud straight into Delta Lake on Databricks enormously reduces the complexity of writing handbook code to construct customized real-time streaming pipelines and internet hosting open supply Kafka, saving a whole lot of hours of engineering assets. Delta Lake supplies reliability that conventional information lakes lack, enabling organizations to run analytics straight on their information lake for as much as 50x quicker time-to-insights. As soon as streaming information is in Delta Lake, you may unify it with batch information to construct built-in information pipelines to energy your mission-critical purposes.

1. Streaming on-premises information for cloud analytics

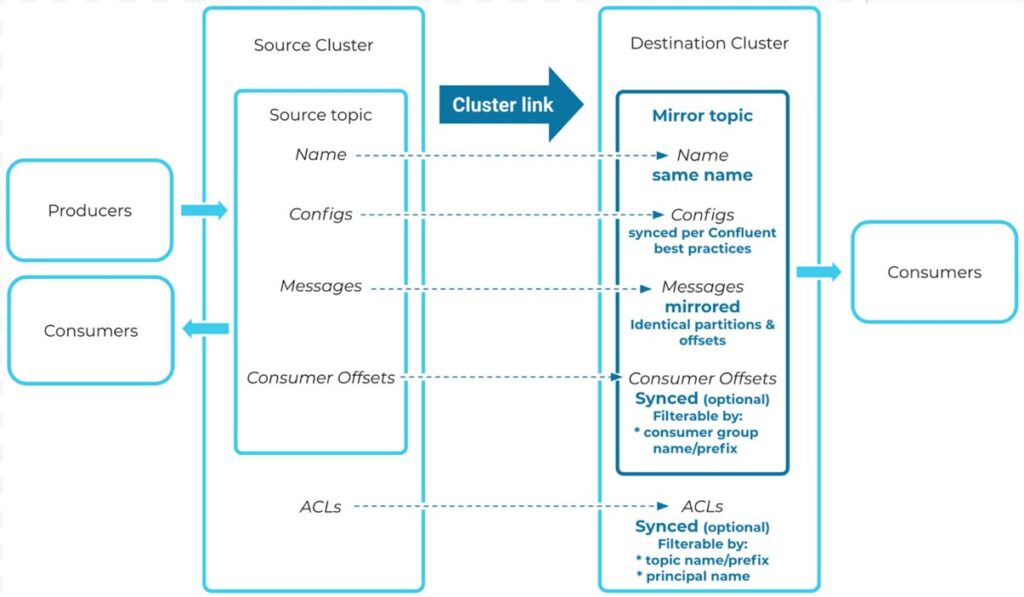

Information groups can migrate from legacy information platforms to the cloud or throughout clouds with Confluent and Databricks. Confluent leverages its Apache Kafka footprint to succeed in into on-premises Kafka clusters from Confluent Cloud to create an instantaneous cluster to cluster answer or supplies wealthy libraries of fully-managed or self-managed connectors for bringing real-time information into Delta Lake. Databricks gives the pace and scale to handle your real-time utility in manufacturing so you may meet your SLAs, enhance productiveness, make quick selections, simplify streaming operations and innovate.

2. Streaming information for analysts and enterprise customers utilizing SQL analytics

Relating to constructing business-ready BI reviews, querying information that’s recent and continually up to date is a problem. Processing information at relaxation and in movement requires completely different semantics and sometimes takes completely different ability units. Confluent gives CDC connectors for a number of databases that import probably the most present occasion datastreams to devour as tables in Databricks. For instance, a grocery supply service must mannequin a stream of purchaser availability information and mix it with real-time buyer orders to determine potential delivery delays. Utilizing Confluent and Databricks, organizations can prep, be a part of, enrich and question streaming information units in Databricks SQL to carry out blazingly quick analytics on stream information.

With as much as 12x higher price-performance than a conventional information warehouse, Databricks SQL unlocks 1000’s of optimizations to offer enhanced efficiency for real-time purposes. One of the best half? It comes with pre-built integrations with widespread BI instruments resembling Tableau and Energy BI so the stream information is prepared for first-class SQL improvement, permitting information analysts and enterprise customers to write down queries in a well-known SQL syntax and construct fast dashboards for significant insights.

3. Predictive analytics with ML fashions utilizing streaming information

Constructing predictive purposes utilizing ML fashions to attain historic information requires its personal toolset. Add real-time streaming information into the combo and the complexity turns into multifold because the mannequin now has to make predictions on new information because it is available in towards static, historic information units.

Confluent and Databricks may also help clear up this drawback. Rework streaming information the identical method you carry out computations on batch information by feeding probably the most up to date occasion streams from a number of information sources into your ML mannequin. Databricks’ collaborative Machine Studying answer standardizes the complete ML lifecycle from experimentation to manufacturing. The ML answer is constructed on Delta Lake so you may seize gigabytes of streaming supply information straight from Confluent Cloud into Delta tables to create ML fashions, question and collaborate on these fashions in real-time. There are a bunch of different Databricks options resembling Managed MLflow that automates experiment monitoring and Mannequin Registry for versioning and role-based entry controls. Basically, it streamlines cross-team collaboration so you may deploy real-time streaming information based mostly operational purposes in manufacturing — at scale and low latency.

Getting Began with Databricks and Confluent Cloud

To get began with the connector, you will have entry to Databricks and Confluent Cloud. Take a look at the Databricks Connector for Confluent Cloud documentation and take it for a spin on Databricks without cost by signing up for a 14-day trial.