Running Fast SQL on DynamoDB Tables

Have you ever wanted to run SQL queries on Amazon DynamoDB tables without impacting your production workloads? Wouldn't it be great to do so without needing to set up an ETL job and then manually monitor that job?

In this blog, I'll discuss how Rockset integrates with DynamoDB and continuously and automatically updates a collection as new items are added to a DynamoDB table. I'll walk through the steps to set up a live integration between Rockset and a DynamoDB table and run millisecond-latency SQL on it.

DynamoDB Integration

Amazon DynamoDB is a key-value and document database where the key is specified at the time of table creation. DynamoDB supports scan operations over multiple items and also captures table activity using DynamoDB Streams. Using these features, Rockset continuously ingests data from DynamoDB in two steps:

- The first time a user creates a DynamoDB-sourced collection, Rockset does a full scan of the DynamoDB table.

- After the scan finishes, Rockset continuously processes DynamoDB Streams to account for new or modified records.

To ensure Rockset doesn't lose any new data written to the DynamoDB table while the scan is happening, Rockset enables strongly consistent scans in the Rockset-DynamoDB connector. It also creates DynamoDB Streams (if not already present) and records the sequence numbers of the existing shards. The continuous processing step (step 2 above) then processes DynamoDB Streams starting from the sequence numbers recorded before the scan.
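The ordering in these steps matters. Stream positions are recorded *before* the scan starts, so any write that lands mid-scan is still replayed afterward. The toy model below is a hypothetical, in-memory illustration of that idea, not Rockset's connector code. It models a single-shard "stream" as a Python list, with event names borrowed from DynamoDB Streams' `INSERT`/`MODIFY`/`REMOVE`. Because replay is an upsert or delete keyed by primary key, it is harmless if the scan already saw a write that gets replayed again.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Tuple

# (sequence number, event name, primary key, new item or None)
Record = Tuple[int, str, str, Optional[Dict[str, Any]]]


@dataclass
class ToyTable:
    """Hypothetical stand-in for a DynamoDB table with a one-shard stream."""
    items: Dict[str, Dict[str, Any]] = field(default_factory=dict)
    stream: List[Record] = field(default_factory=list)

    def put(self, key: str, item: Dict[str, Any]) -> None:
        event = "MODIFY" if key in self.items else "INSERT"
        self.items[key] = item
        self.stream.append((len(self.stream), event, key, item))

    def delete(self, key: str) -> None:
        self.items.pop(key, None)
        self.stream.append((len(self.stream), "REMOVE", key, None))


def ingest(table: ToyTable,
           writes_during_scan: Callable[[ToyTable], None]) -> Dict[str, Dict[str, Any]]:
    # Record the stream position BEFORE scanning.
    checkpoint = len(table.stream)
    # Step 1: full scan (modelled here as a copy of the current items).
    collection = dict(table.items)
    # Writes that arrive while the scan runs still land in the stream.
    writes_during_scan(table)
    # Step 2: replay the stream from the recorded position.
    for _seq, event, key, item in table.stream[checkpoint:]:
        if event == "REMOVE":
            collection.pop(key, None)
        else:
            collection[key] = item  # upsert keyed by primary key
    return collection


table = ToyTable()
table.put("1", {"title": "a"})
table.put("2", {"title": "b"})


def concurrent_writes(t: ToyTable) -> None:
    t.put("3", {"title": "c"})
    t.put("1", {"title": "a2"})
    t.delete("2")


result = ingest(table, concurrent_writes)
print(result == table.items)  # True: nothing written mid-scan was lost
```

If the checkpoint were instead taken after the scan, the three concurrent writes would never reach the collection.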

Primary key values from the DynamoDB table are used to construct the _id field in Rockset, which uniquely identifies a document in a Rockset collection. This ensures that updates to an existing item in the DynamoDB table are applied to the corresponding document in Rockset.
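The following minimal sketch shows why keying documents by primary key makes an update land on the right document. The `make_doc_id` and `upsert` helpers are hypothetical. The SHA-256-over-canonical-JSON encoding is an assumption for illustration, because the post doesn't say how Rockset actually encodes `_id`.

```python
import hashlib
import json
from typing import Any, Dict, Iterable


def make_doc_id(item: Dict[str, Any], key_attributes: Iterable[str]) -> str:
    """Derive a stable id from the item's primary key values only.

    Hypothetical encoding; Rockset's real _id format is not described here.
    """
    key = {name: item[name] for name in key_attributes}
    canonical = json.dumps(key, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def upsert(collection: Dict[str, Dict[str, Any]], item: Dict[str, Any],
           key_attributes: Iterable[str]) -> None:
    doc = dict(item, _id=make_doc_id(item, list(key_attributes)))
    # Same primary key -> same _id -> the old document is replaced in place.
    collection[doc["_id"]] = doc


collection: Dict[str, Dict[str, Any]] = {}
upsert(collection, {"id": "8863", "score": "104"}, ["id"])  # made-up item
upsert(collection, {"id": "8863", "score": "111"}, ["id"])  # later update
print(len(collection))  # 1
```

For a table with a sort key, you would pass both key attribute names, for example `["pk", "sk"]`.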

Connecting DynamoDB to Rockset

For this example, I created a DynamoDB table programmatically using a Hacker News data set. The data set consists of records about each post and comment on the website. Each field in the dataset is described here. I've included a sample of this data set in our recipes repository.

The table was created using the id field as the partition key for DynamoDB. I also had to massage the data set, because DynamoDB doesn't accept empty string values. With Rockset, as you will see in the next few steps, you don't need to perform such ETL operations or provide schema definitions to create a collection and make it immediately queryable via SQL.
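The massaging step isn't shown in the post. One plausible approach, sketched here as an assumption rather than the author's actual preprocessing, is to drop empty-string attributes recursively before writing each item. DynamoDB has since added support for empty string values in non-key attributes, but key attributes still cannot be empty.

```python
from typing import Any


def drop_empty_strings(value: Any) -> Any:
    """Recursively remove empty-string values from dicts and lists.

    Hypothetical preprocessing helper; non-string values pass through unchanged.
    """
    if isinstance(value, dict):
        return {k: drop_empty_strings(v) for k, v in value.items() if v != ""}
    if isinstance(value, list):
        return [drop_empty_strings(v) for v in value if v != ""]
    return value


# Made-up Hacker News-style item with an empty url and an empty list entry.
print(drop_empty_strings({"id": "1", "url": "", "kids": ["", "2"]}))
# {'id': '1', 'kids': ['2']}
```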

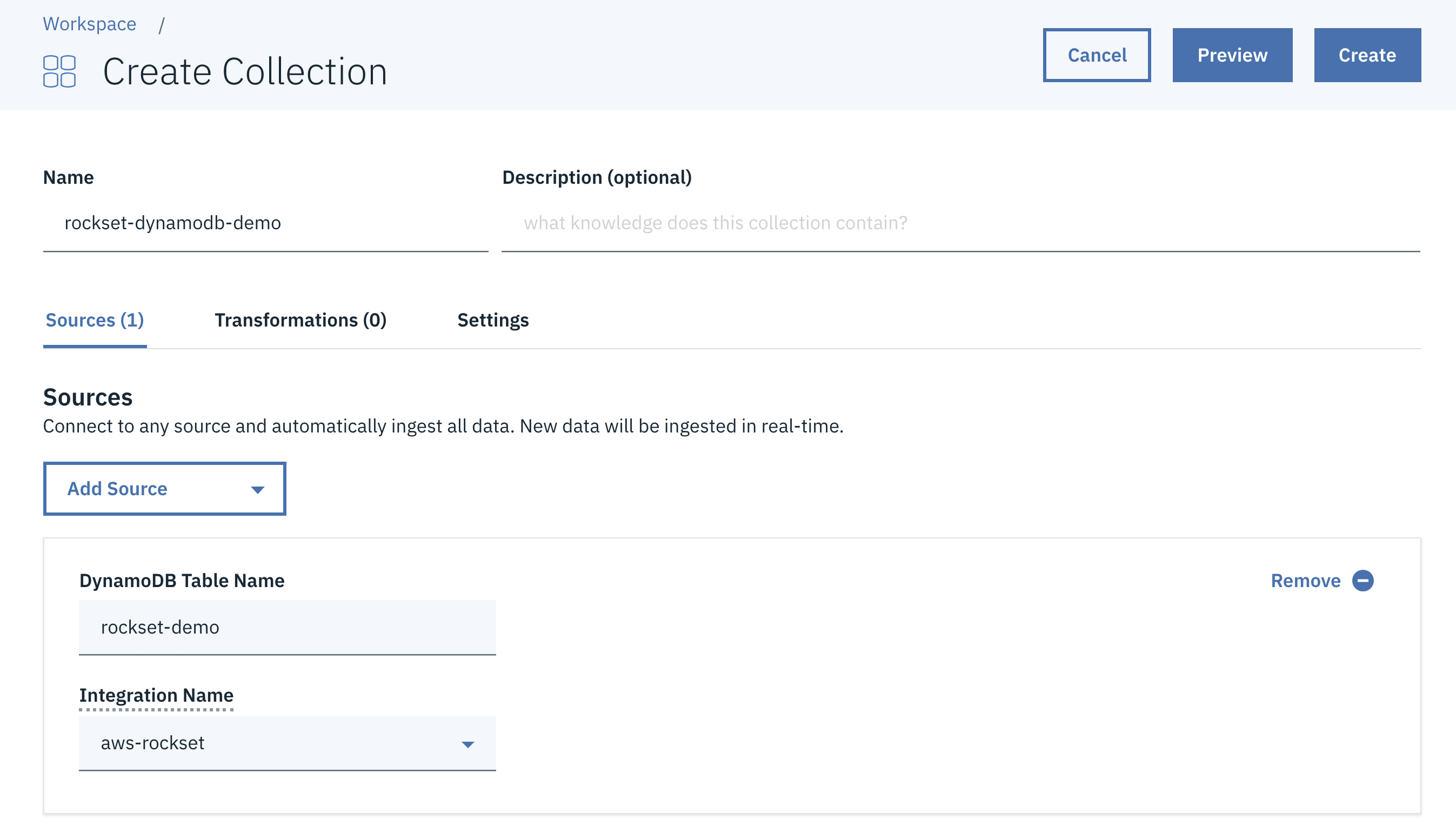

Creating a Rockset Collection

I'll use the Rockset Python Client to create a collection backed by a DynamoDB table. To do this in your environment, you will need to create an Integration (an object that represents your AWS credentials) and set up the relevant permissions on the DynamoDB table, which allow Rockset to perform certain read operations on that table.

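```python
from rockset import Client

# Replace ... with your Rockset API key
rs = Client(api_key=...)

# Retrieve the existing Integration that holds the AWS credentials
aws_integration = rs.Integration.retrieve("aws-rockset")

# Describe the DynamoDB table that will feed the collection
sources = [
    rs.Source.dynamo(
        table_name="rockset-demo",
        integration=aws_integration)]

# Create the collection: Rockset scans the table, then follows its stream
rockset_dynamodb_demo = rs.Collection.create("rockset-dynamodb-demo", sources=sources)
```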
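The client code assumes the `aws-rockset` integration already has permission to read the table. As a hedged sketch, the helper below builds an IAM policy document for a scan-then-stream connector.

- **Action list:** this is an assumption, so check Rockset's documentation for the authoritative set. Each action name, however, is a real DynamoDB IAM action.
- **`UpdateTable`:** included only because the connector may create a stream when one is absent.
- **Placeholders:** the region and account ID are not real values.

```python
import json
from typing import Any, Dict, List

# Assumption: actions a scan-then-stream connector plausibly needs.
ASSUMED_ACTIONS: List[str] = [
    "dynamodb:DescribeTable",
    "dynamodb:Scan",
    "dynamodb:UpdateTable",       # only if the connector must enable Streams
    "dynamodb:DescribeStream",
    "dynamodb:GetShardIterator",
    "dynamodb:GetRecords",
]


def dynamodb_read_policy(region: str, account_id: str, table: str) -> Dict[str, Any]:
    """Build an IAM policy scoped to one table and its streams (hypothetical helper)."""
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/{table}"
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ASSUMED_ACTIONS,
            # The table itself plus any stream attached to it.
            "Resource": [table_arn, f"{table_arn}/stream/*"],
        }],
    }


if __name__ == "__main__":
    # Placeholder region and account ID.
    policy = dynamodb_read_policy("us-west-2", "123456789012", "rockset-demo")
    print(json.dumps(policy, indent=2))
```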

Alternatively, DynamoDB-sourced collections can also be created from the Rockset console.

Running SQL on DynamoDB Data

Each document in Rockset corresponds to one item (row) in the DynamoDB table. Rockset automatically infers the schema, as shown below.

```
rockset> describe "rockset-dynamodb-demo";
+---------------------------------+---------------+----------+-----------+
| field                           | occurrences   | total    | type      |
|---------------------------------+---------------+----------+-----------|
| ['_event_time']                 | 18926775      | 18926775 | timestamp |
| ['_id']                         | 18926775      | 18926775 | string    |
| ['_meta']                       | 18926775      | 18926775 | object    |
| ['_meta', 'dynamodb']           | 18926775      | 18926775 | object    |
| ['_meta', 'dynamodb', 'table']  | 18926775      | 18926775 | string    |
| ['by']                          | 18926775      | 18926775 | string    |
| ['dead']                        | 890827        | 18926775 | bool      |
| ['deleted']                     | 562904        | 18926775 | bool      |
| ['descendants']                 | 2660205       | 18926775 | string    |
| ['id']                          | 18926775      | 18926775 | string    |
| ['parent']                      | 15716204      | 18926775 | string    |
| ['score']                       | 3045941       | 18926775 | string    |
| ['text']                        | 18926775      | 18926775 | string    |
| ['time']                        | 18899951      | 18926775 | string    |
| ['title']                       | 18926775      | 18926775 | string    |
| ['type']                        | 18926775      | 18926775 | string    |
| ['url']                         | 18926775      | 18926775 | string    |
+---------------------------------+---------------+----------+-----------+
```
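Rockset's inference runs server-side, but the shape of the `describe` output is easy to reproduce. The toy `describe` below is a hypothetical helper, not Rockset's implementation. It assumes `occurrences` counts documents in which a field appears with a given type and `total` counts all documents. That reading fits, for example, `dead` appearing in only 890,827 of 18,926,775 documents. Note that `_event_time` and `_meta` are fields Rockset adds itself, so running the toy over raw DynamoDB items won't produce them.

```python
from collections import Counter
from typing import Any, Dict, Iterable, Iterator, List, Tuple


def type_name(value: Any) -> str:
    # Check bool before int: bool is a subclass of int in Python.
    if isinstance(value, bool):
        return "bool"
    if isinstance(value, int):
        return "int"
    if isinstance(value, float):
        return "float"
    if isinstance(value, str):
        return "string"
    if isinstance(value, dict):
        return "object"
    if isinstance(value, list):
        return "array"
    return "null" if value is None else type(value).__name__


def walk(doc: Dict[str, Any],
         prefix: Tuple[str, ...] = ()) -> Iterator[Tuple[Tuple[str, ...], str]]:
    """Yield (field_path, type) for every field, descending into objects."""
    for name, value in doc.items():
        path = prefix + (name,)
        yield path, type_name(value)
        if isinstance(value, dict):
            yield from walk(value, path)


def describe(docs: Iterable[Dict[str, Any]]) -> List[Tuple[List[str], int, int, str]]:
    """Return (field, occurrences, total, type) rows sorted by field path."""
    counts: Counter = Counter()
    total = 0
    for doc in docs:
        total += 1
        counts.update(walk(doc))
    return [(list(path), n, total, t) for (path, t), n in sorted(counts.items())]


# Made-up sample documents.
for row in describe([{"id": "1", "by": "pg", "dead": True}, {"id": "2", "by": "x"}]):
    print(row)
```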

Now we're ready to run fast SQL on data from our DynamoDB table. Let's write a few queries to get some insights from this data set.

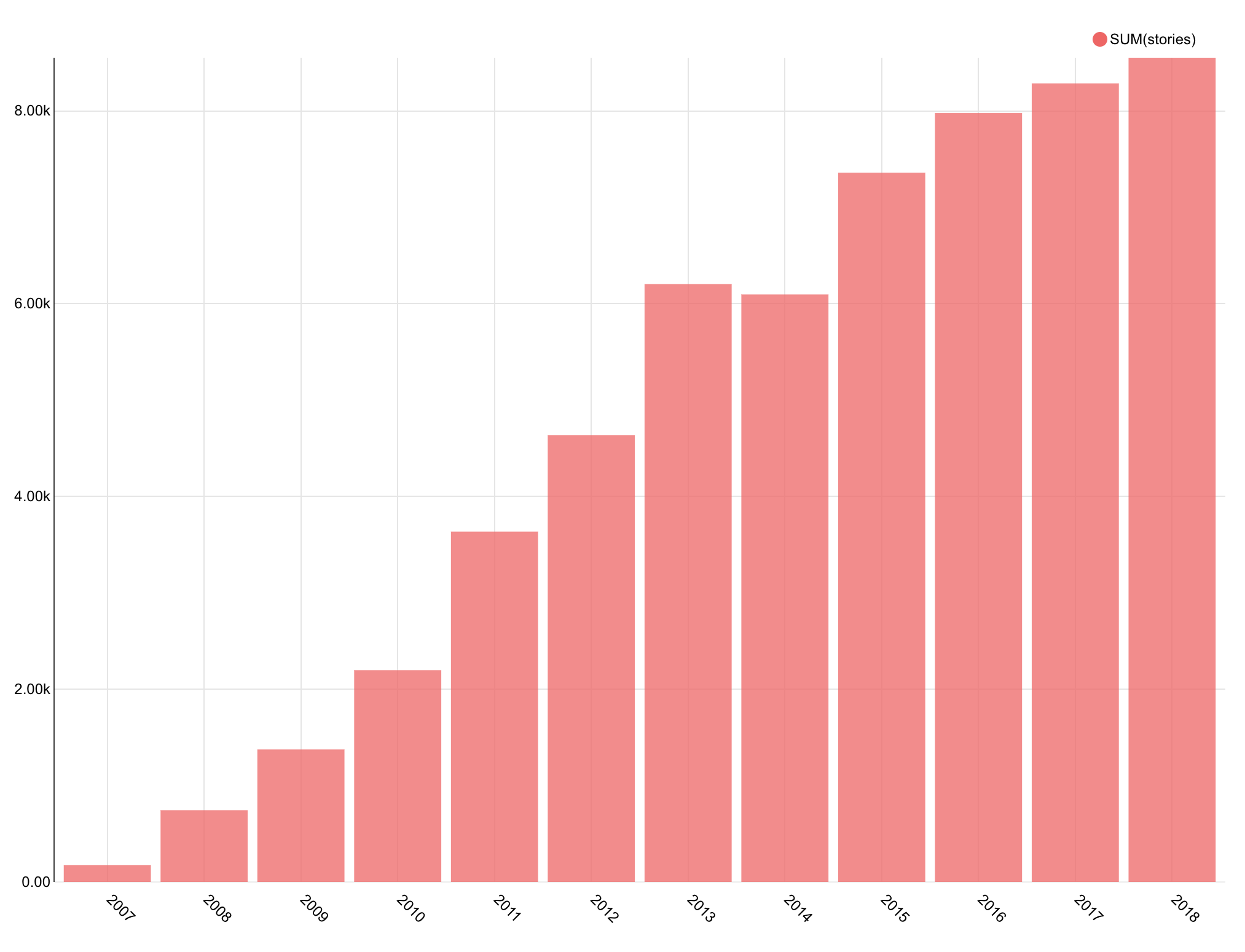

Since we're obviously interested in the topic of data, let's see how frequently people have discussed or shared content about “data” on Hacker News over time. In this query, I tokenize the title, extract the year from the time field, and return the number of occurrences of “data” in the tokens, grouped by year.

```sql
with title_tokens as (
    select rows.tokens as token, subq.year as year
    from (
        -- time is stored as a string of epoch seconds, hence the ::int cast
        select tokenize(title) as title_tokens,
            EXTRACT(YEAR from DATETIME(TIMESTAMP_SECONDS(time::int))) as year
        from "rockset-dynamodb-demo"
    ) subq, unnest(title_tokens as tokens) as rows
)
select year, count(token) as stories
from title_tokens
where lower(token) = 'data'
group by year order by year
```

```
+-----------+------+
| stories   | year |
|-----------+------|
| 176       | 2007 |
| 744       | 2008 |
| 1371      | 2009 |
| 2192      | 2010 |
| 3624      | 2011 |
| 4621      | 2012 |
| 6164      | 2013 |
| 6020      | 2014 |
| 7224      | 2015 |
| 7878      | 2016 |
| 8159      | 2017 |
| 8438      | 2018 |
+-----------+------+
```
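To sanity-check the query logic on a small sample, such as the one in the recipes repository, here is the same computation in plain Python. The regex tokenizer is an assumption standing in for Rockset's `tokenize()`, whose exact rules may differ. The sample records are made up.

```python
import re
from collections import Counter
from datetime import datetime, timezone
from typing import Dict, Iterable

TOKEN_RE = re.compile(r"\w+")  # assumption: rough stand-in for tokenize()


def count_term_by_year(items: Iterable[Dict[str, str]], term: str) -> Dict[int, int]:
    """Count title tokens equal to `term` (case-insensitive), grouped by year."""
    term = term.lower()
    counts: Counter = Counter()
    for item in items:
        title = item.get("title")
        if not title or "time" not in item:
            continue  # e.g. comments, which have no title
        # `time` holds epoch seconds as a string, mirroring
        # TIMESTAMP_SECONDS(time::int) in the SQL; years are taken in UTC.
        year = datetime.fromtimestamp(int(item["time"]), tz=timezone.utc).year
        for token in TOKEN_RE.findall(title):
            if token.lower() == term:
                counts[year] += 1
    return dict(sorted(counts.items()))


sample = [
    {"id": "1", "title": "Big Data at scale", "time": "1200000000"},
    {"id": "2", "title": "Data, data everywhere", "time": "1250000000"},
    {"id": "3", "text": "a comment without a title", "time": "1250000000"},
]
print(count_term_by_year(sample, "data"))  # {2008: 1, 2009: 2}
```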

Using the Apache Superset integration with Rockset, I plotted a graph of the results. (You can also use data visualization tools such as Tableau, Redash, and Grafana.)

The number of stories relating to data has grown steadily over time, apart from a slight dip in 2014.

Next, let's mine the Hacker News data set for observations on one of the most talked-about technologies of the past two years: blockchain. First, let's check how user engagement around blockchain and cryptocurrencies has been trending.

```sql
with title_tokens as (
    select rows.tokens as token, subq.year as year
    from (
        select tokenize(title) as title_tokens,
            EXTRACT(YEAR from DATETIME(TIMESTAMP_SECONDS(time::int))) as year
        from "rockset-dynamodb-demo"
    ) subq, unnest(title_tokens as tokens) as rows
)
select year, count(token) as count
from title_tokens
where lower(token) = 'crypto' or lower(token) = 'blockchain'
group by year order by year
```

```
+---------------+--------+
| count         | year   |
|---------------+--------|
| 6             | 2008   |
| 26            | 2009   |
| 35            | 2010   |
| 43            | 2011   |
| 75            | 2012   |
| 278           | 2013   |
| 431           | 2014   |
| 750           | 2015   |
| 1383          | 2016   |
| 2928          | 2017   |
| 5550          | 2018   |
+---------------+--------+
```

As you can see, interest in blockchain grew enormously in 2017 and 2018. The results are also in line with this study, which estimated that the number of crypto users doubled in 2018.
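The following worked calculation puts "grew enormously" in numbers by computing the year-over-year ratio from the crypto/blockchain query output. Keep in mind that these are token mentions in titles, not user counts.

```python
from typing import Dict, List, Tuple

# Yearly counts copied from the crypto/blockchain query output.
counts: Dict[int, int] = {
    2008: 6, 2009: 26, 2010: 35, 2011: 43, 2012: 75, 2013: 278,
    2014: 431, 2015: 750, 2016: 1383, 2017: 2928, 2018: 5550,
}


def year_over_year(series: Dict[int, int]) -> List[Tuple[int, float]]:
    """Return (year, count / previous year's count) for consecutive years."""
    years = sorted(series)
    return [(y, series[y] / series[prev]) for prev, y in zip(years, years[1:])]


for year, ratio in year_over_year(counts)[-3:]:
    print(f"{year}: {ratio:.2f}x")
# 2016: 1.84x
# 2017: 2.12x
# 2018: 1.90x
```

Mentions roughly doubled in each of the last three years.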

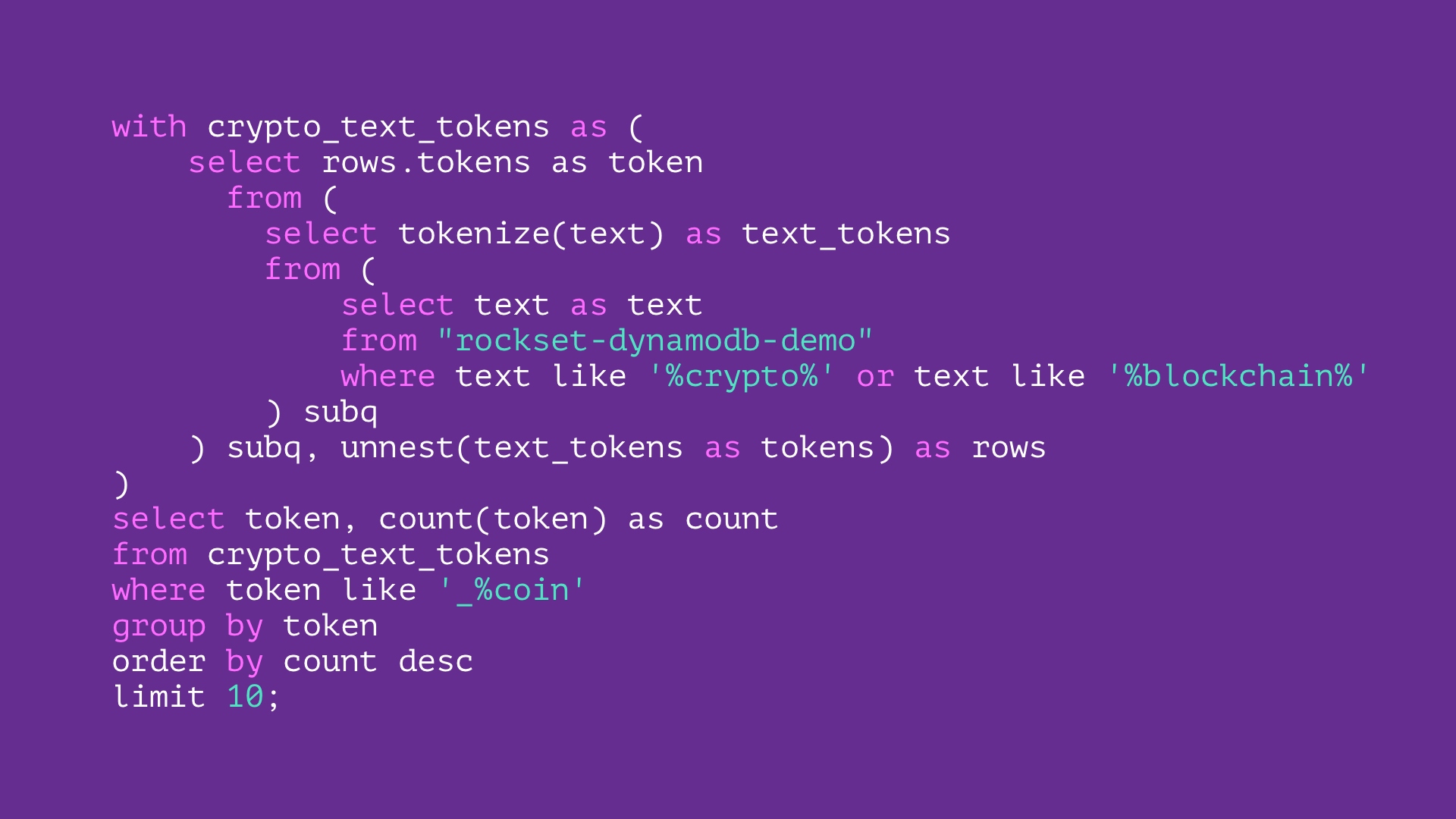

Along with blockchain, hundreds of cryptocurrencies emerged. Let's find the most popular coins in our data set.

```sql
with crypto_text_tokens as (
    select rows.tokens as token
    from (
        select tokenize(text) as text_tokens
        from (
            -- keep only posts and comments that mention crypto or blockchain
            select text as text
            from "rockset-dynamodb-demo"
            where text like '%crypto%' or text like '%blockchain%'
        ) subq
    ) subq, unnest(text_tokens as tokens) as rows
)
select token, count(token) as count
from crypto_text_tokens
where token like '%coin'
group by token
order by count desc
limit 10;
```

```
+---------+------------+
| count   | token      |
|---------+------------|
| 29197   | bitcoin    |
| 512     | litecoin   |
| 454     | dogecoin   |
| 433     | cryptocoin |
| 362     | namecoin   |
| 239     | altcoin    |
| 219     | filecoin   |
| 122     | zerocoin   |
| 81      | stablecoin |
| 69      | peercoin   |
+---------+------------+
```

As one might have guessed, Bitcoin appears to be the most popular cryptocurrency in our Hacker News data.
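The coin query relies on SQL `LIKE` patterns, where `%` matches any run of characters (including none) and `_` matches exactly one character. The small translator below is a sketch of those standard semantics, not Rockset's implementation, and it assumes a case-sensitive `LIKE` as in standard SQL. It shows that a pattern ending in `coin` matches `bitcoin` but not `bitcoins`. If Rockset's `LIKE` is case-sensitive, the `'%crypto%'` filter would also skip text that only says "Crypto".

```python
import re


def like_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a SQL LIKE pattern into a regex (ESCAPE clauses not handled)."""
    parts = []
    for ch in pattern:
        if ch == "%":
            parts.append(".*")        # any run of characters, possibly empty
        elif ch == "_":
            parts.append(".")         # exactly one character
        else:
            parts.append(re.escape(ch))
    return re.compile("".join(parts), re.DOTALL)


def sql_like(value: str, pattern: str) -> bool:
    # LIKE must match the whole value, hence fullmatch.
    return like_to_regex(pattern).fullmatch(value) is not None


for token in ["bitcoin", "bitcoins", "coin", "coinbase"]:
    print(token, sql_like(token, "%coin"))
# bitcoin True
# bitcoins False
# coin True
# coinbase False
```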

Summary

In this whole process, I simply created a Rockset collection with a DynamoDB source, without any data transformation or schema modeling, and immediately ran SQL queries over it. With Rockset, you can also join data across different DynamoDB tables or other sources to power your live applications.

Other DynamoDB resources: